Humanoid robots have reached the stage where software choices matter as much as hardware. On factory floors, the difference between a novelty demonstration and a deployable machine now comes down to whether an AI stack can sense, reason, and act with the physical restraint that factories require.

The eye-catching headline is “AI runs the robot.” The engineering reality is narrower: foundation models are being pushed into service as control layers, and the rest of the stack, including training pipelines, safety systems, compute, and governance, has to mature quickly enough to keep up.

These are the main building blocks and fault lines shaping that shift, from Google's Gemini robotics ambitions to the less glamorous plumbing that determines how humanoids operate around people and pallets.

1. Gemini Robotics as the control layer, not just a chatbot

Google DeepMind presented Gemini Robotics as an effort to bring AI into the physical world. Senior Director Carolina Parada said the team is excited to start working with Boston Dynamics to understand what can be done with its new Atlas robot as DeepMind trains new models to expand the scope of robotics and to scale robots safely and efficiently. The partnership matters because it treats Gemini not as a conversational interface but as a candidate brain for embodied work.

For industrial engineering teams, “taking control” does not mean that a model improvises. It means the model can connect perception to action inside a tightly constrained envelope: defined tasks, known tooling, tested motion libraries, and safety interlocks that remain authoritative even when the AI is confident. On the factory floor, latency budgets, false positives, and edge-case physics stop being abstractions and start being downtime.
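A minimal sketch of that envelope idea, assuming a hypothetical command format: the interlock is checked before anything else, and the model's confidence never enters the decision. The skill names, the speed cap, and the `gate` function are illustrative assumptions, not anything described by Google DeepMind or Boston Dynamics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    skill: str         # name of an entry in a tested motion library (hypothetical)
    speed_mps: float   # requested speed in meters per second
    confidence: float  # model's self-reported confidence, 0..1


# Hypothetical envelope: the only skills and speeds allowed to reach actuators.
ALLOWED_SKILLS = {"pick_tote", "place_tote", "hold_position"}
MAX_SPEED_MPS = 1.0


def gate(cmd: Command, interlock_tripped: bool) -> Command:
    """Return the command that is actually allowed to reach the actuators."""
    hold = Command("hold_position", 0.0, cmd.confidence)
    # The interlock is authoritative: confidence is deliberately ignored.
    if interlock_tripped:
        return hold
    # Anything outside the validated skill list is rejected, not attempted.
    if cmd.skill not in ALLOWED_SKILLS:
        return hold
    # Inside the envelope, the request passes with its speed clamped.
    return Command(cmd.skill, min(cmd.speed_mps, MAX_SPEED_MPS), cmd.confidence)
```

The fallback here is a controlled hold instead of a power cut, since a biped is not necessarily safe with its motors off.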

2. Atlas is being productized around industrial constraints

Boston Dynamics' new electric Atlas is set to begin deployments at Hyundai facilities, with the first units placed at the company's Robotics Metaplant Application Center (RMAC). The production model comes with a spec sheet that reads like a factory inventory entry: 6.2 feet tall, a 7.5-foot reach, payloads of up to 66 pounds, an operating temperature range of -4°F to 104°F, and an IP67 rating, which suggests it can be hosed down where dust and grime accumulate.

Those figures are not marketing garnish; they are the first indication of where humanoids are being aimed. Warehouse and parts logistics reward reach, tolerance for hot and cold loading zones, and tolerance for spray-down maintenance routines. Put simply, a robot that cannot withstand mundane industrial abuse will never get the chance to be intelligent over the long term.

3. Training pipelines are shifting from coding to teaching

In a factory, the shortest path to usefulness is a repeatable skill that can be trained, audited, and deployed across departments. One account of a visit to a Hyundai parts warehouse described Atlas being trained through teleoperation, using virtual-reality controls or motion-capture demonstrations, so that repeated human behavior becomes training data for the machine. According to Boston Dynamics researcher Scott Kuindersma, when a teleoperator can perform the task the team wants the robot to do, and perform it repeatedly, that yields data that can be fed into the robot's AI models so it can later perform the task itself.

This is where AI on the factory floor becomes a data-engineering project. Demonstrations need to be diverse enough to cover real variation (bin locations, box deformation, occlusion), labeled cleanly, and numerous enough to test policies before they reach a real aisle. The operational payoff is that once a skill is proven, it becomes a shareable software artifact: physical capability starts to look like a fleet update.
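The coverage requirement can be sketched as a simple audit over demonstration metadata. This is an illustrative example only: the factor names, the tiny dataset, and the `coverage_gaps` helper are assumptions, and a real pipeline would track far more conditions than two.

```python
from collections import Counter
from itertools import product


def coverage_gaps(demos, factors, min_count):
    """Return factor combinations with fewer than min_count demonstrations."""
    names = sorted(factors)
    # Count how many demonstrations were recorded under each combination.
    counts = Counter(tuple(d[n] for n in names) for d in demos)
    return [
        dict(zip(names, combo))
        for combo in product(*(factors[n] for n in names))
        if counts[combo] < min_count
    ]


# Hypothetical variation factors and teleoperation metadata.
factors = {"bin": ["left", "right"], "occluded": [False, True]}
demos = [
    {"bin": "left", "occluded": False},
    {"bin": "left", "occluded": False},
    {"bin": "right", "occluded": False},
    {"bin": "left", "occluded": True},
    {"bin": "left", "occluded": True},
]

gaps = coverage_gaps(demos, factors, min_count=2)
for gap in gaps:
    print("needs more demonstrations:", gap)
```

In this toy dataset the right-hand bin is under-covered in both occlusion states, which is the kind of gap worth finding before a policy meets a real aisle.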

4. World models are linking video and robot planning

A longstanding problem in robotics is the gap between seeing and doing: a system can classify the world and still fail to plan once the world shifts. Meta's V-JEPA 2, a world model trained on video, is one attempt to close that gap by learning physical dynamics from observation and then using a limited amount of robot data to tie predictions to actions. Meta described V-JEPA 2 as a 1.2-billion-parameter model trained on more than 1 million hours of video, and said it can be made useful for planning and control with only 62 hours of robot data in an action-conditioned stage.

That recipe, massive passive learning plus a small amount of action data, fits industrial reality. Factories can produce video cheaply, but high-quality robot interaction data is costly and risky to collect. Systems that generalize from observation need fewer physical “oops” moments to reach competence, and they make it more realistic to put robots into new aisles, new lighting, and new SKUs without retraining the underlying model.

5. Safety is becoming a first-class control problem

Humanoids pose different safety problems than bolted-down industrial arms. Nathan Bivans, CTO of FORT Robotics, summed it up this way: a biped is not necessarily in a safe state when power is cut. Simply cutting power to a humanoid is likely to make it fall, endangering both the human operators standing nearby and the robot itself.

Bivans also pointed to versatility: machines that can reason and adapt to an open-ended range of situations must be validated against standards that cover a broader behavioral repertoire than traditional single-purpose machines. In practice, this pushes safety into context-sensitive layers: systems that continuously sense proximity, speed, load, and environmental conditions, and that can degrade capability gracefully instead of toggling between full autonomy and a dead stop. That nuance determines whether industrial adoption succeeds, because a robot that freezes too often is also unsafe, just in a different way: it encourages workarounds.
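Graceful degradation can be illustrated with a speed multiplier that shrinks as a person gets closer, instead of a binary run/stop switch. This is a minimal sketch under stated assumptions: the distance thresholds and the load rule are invented for illustration, `speed_scale` is a hypothetical helper, and nothing here is a certified safety function or a method attributed to FORT Robotics.

```python
def speed_scale(distance_m: float, carrying_load: bool,
                stop_dist_m: float = 0.5, full_dist_m: float = 3.0) -> float:
    """Map the nearest-human distance to a speed multiplier in [0, 1]."""
    if distance_m <= stop_dist_m:
        # Controlled stop: the robot keeps balancing, it does not cut power.
        return 0.0
    if distance_m >= full_dist_m:
        scale = 1.0
    else:
        # Linear ramp between the stop distance and the full-speed distance.
        scale = (distance_m - stop_dist_m) / (full_dist_m - stop_dist_m)
    # Hypothetical rule: halve the allowed speed while carrying a load.
    return scale * 0.5 if carrying_load else scale


for d in (0.3, 1.75, 4.0):
    print(d, speed_scale(d, carrying_load=False), speed_scale(d, carrying_load=True))
```

The intermediate values are the point: a robot that slows to half speed near a worker keeps the line moving, while one that halts outright on every approach invites people to defeat the sensor.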

6. The compute stack is being tuned for agentic, multi-step work

Embodied AI does not run on inspiration; it runs on tokens, frames, and inference budgets. NVIDIA positioned the Nemotron 3 family of open models as infrastructure for “agentic AI applications,” including multi-agent systems where specialization and orchestration matter. The company stated that Nemotron 3 Nano delivers 4x higher throughput than its predecessor and supports a 1-million-token context window, while other variants scale upward for heavier reasoning workloads.

For factories, the important implication is not brand allegiance; it is architectural. As robots gain more sensors and more autonomy, teams will route tasks between models: a fast one for perception and reflexes, a deeper one for planning, and strict runtime guards that keep both inside operational boundaries. The winners will be stacks that make this routing predictable, testable, and affordable at fleet scale.
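One way to picture that routing is a small dispatcher that picks a model by latency budget and lets a runtime guard veto the result. Everything in this sketch is an assumption for illustration: the 50 ms cutoff, the stand-in model functions, and the allowed-action list are hypothetical, and real models would sit behind inference calls.

```python
from typing import Any, Callable, Dict

Task = Dict[str, Any]
Proposal = Dict[str, Any]

# Fallback returned whenever the guard rejects a proposal.
HOLD: Proposal = {"action": "hold_position", "source": "guard"}


def make_router(fast: Callable[[Task], Proposal],
                deep: Callable[[Task], Proposal],
                guard: Callable[[Proposal], bool],
                fast_deadline_ms: int = 50) -> Callable[[Task], Proposal]:
    """Build a router: tight deadlines go to `fast`, everything else to `deep`."""
    def route(task: Task) -> Proposal:
        model = fast if task["deadline_ms"] <= fast_deadline_ms else deep
        proposal = model(task)
        # The guard has the last word regardless of which model answered.
        return proposal if guard(proposal) else HOLD
    return route


# Stand-in models for the sketch.
def fast_model(task: Task) -> Proposal:
    return {"action": "adjust_grip", "source": "fast"}


def deep_model(task: Task) -> Proposal:
    return {"action": task.get("goal", "replan"), "source": "deep"}


def in_bounds(proposal: Proposal) -> bool:
    return proposal["action"] in {"adjust_grip", "replan", "move_pallet"}


route = make_router(fast_model, deep_model, in_bounds)
print(route({"deadline_ms": 20}))
print(route({"deadline_ms": 500, "goal": "move_pallet"}))
print(route({"deadline_ms": 500, "goal": "open_fence"}))
```

Keeping the routing rule and the guard as plain functions is what makes the behavior testable: each can be exercised offline against recorded tasks before it touches a fleet.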

7. Labor impact is increasingly tied to “agents,” not individual apps

Automation anxiety often latches onto a single product release, but the deeper shift is structural: AI is moving from helping employees do tasks to doing portions of the tasks itself. A cited MIT estimate found 11.7% of jobs could already be automated using AI, and investors surveyed expected 2026 to intensify the shift as “agents as software” expand from productivity tools to automating work.

On factory floors, the visible change is not a humanoid replacing an entire role in one swoop. It is task capture: pallet moves, repetitive pick-and-place, night-shift inventory loops, and “go look at that” inspections. As those slices become software-defined, companies reorganize around supervision, maintenance, data collection, and continuous improvement work that looks more like managing a fleet than staffing a line.

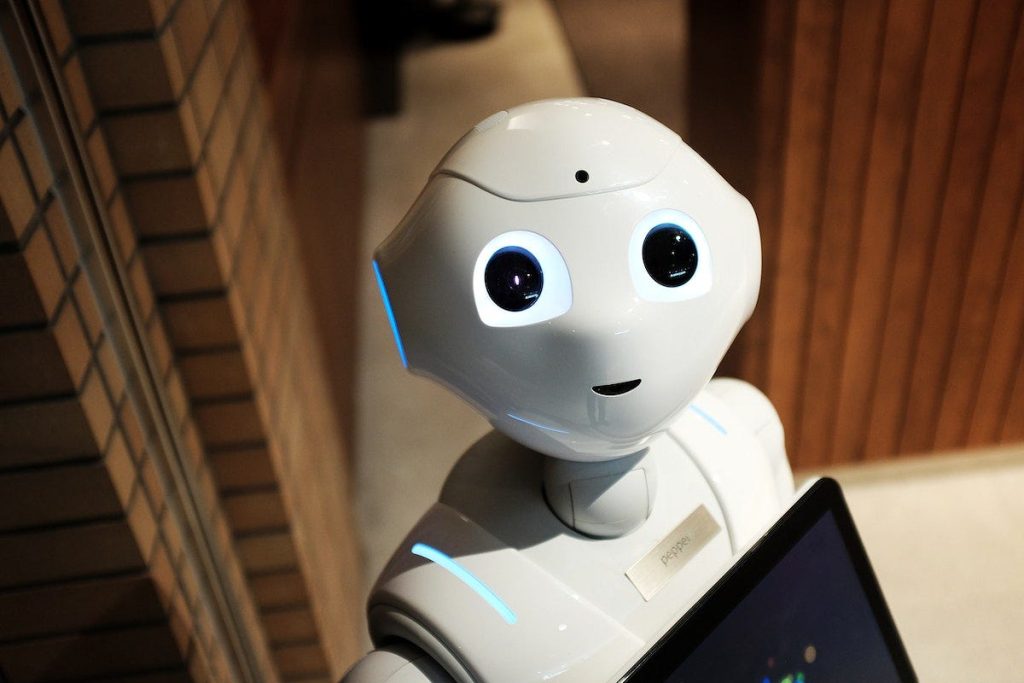

The throughline is clear: the factory is becoming the proving ground for embodied AI, where flashy capability only counts if it survives safety audits, production grime, and operational economics. The next generation of industrial robots will be judged less by how human they look and more by how reliably their AI can be boxed into behavior that factories can live with.