One of the most effective ways to make an exoplanet signal robust is to have multiple teams test it, which is how Renyu Hu described the way contested exoplanet signals get stress-tested.

That remark captures where the search for extraterrestrial technology and biology stands at the beginning of 2026: not at the point of dramatic confirmation, but at the point where instrumentation, catalogs, and statistical framing have become powerful enough to produce a small collection of stubborn anomalies. In practice, the candidate signs increasingly resemble engineering quantities: waste heat in the mid-infrared, atmospheric chemistry revealed in transit spectra, and radio searches that must first model how the space between stars distorts signals.

What follows are the most technical and most current threads running through SETI and astrobiology work. Each is incomplete on its own, and each is better approached as a systems-engineering problem than as a detective story.

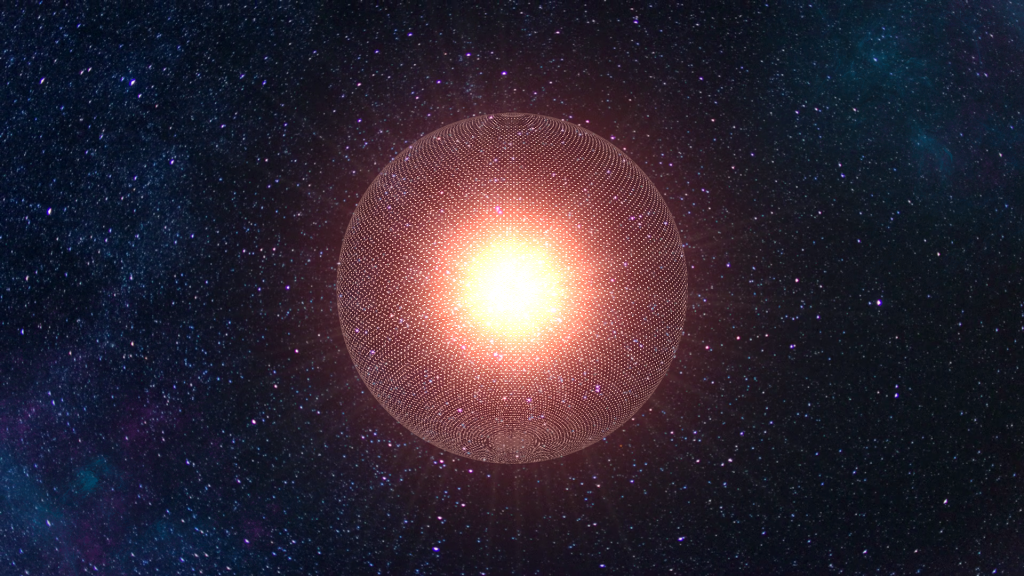

1. Mid-infrared waste heat that does not fit the star

The most practical technosignature concept is also among the oldest: if a civilization harnesses stellar energy on a large scale, it must release waste heat. Surveys combining Gaia astrometry with all-sky infrared photometry have identified 60 candidate stars that emit more in the mid-infrared than standard stellar models predict. In the most striking cases, the excess is 60 times the expected level in the relevant bands, consistent with the broad thermal signature that a partial Dyson swarm would produce.
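To make the scale of such an excess concrete, here is a minimal sketch of a two-blackbody toy model. It assumes (none of this comes from the surveys themselves) that a swarm intercepts a fraction of the star's luminosity and re-radiates all of it as a single-temperature blackbody, while the star is also treated as a blackbody. The function names, the 3500 K star, the 300 K swarm, and the 22 micron wavelength are illustrative choices, not values from the source.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_lambda(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance B_lambda(T) in W m^-3 sr^-1."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(x)


def excess_ratio(covering_fraction: float, star_temp_k: float,
                 swarm_temp_k: float, wavelength_m: float) -> float:
    """Swarm-to-star flux-density ratio at one wavelength (toy model).

    Assumption: a blackbody of luminosity L and temperature T has a flux
    density proportional to L * B_lambda(T) / T**4. The swarm re-emits
    covering_fraction * L; the star still shows (1 - covering_fraction) * L.
    """
    if not 0.0 <= covering_fraction < 1.0:
        raise ValueError("covering_fraction must be in [0, 1)")
    per_lum_star = planck_lambda(wavelength_m, star_temp_k) / star_temp_k**4
    per_lum_swarm = planck_lambda(wavelength_m, swarm_temp_k) / swarm_temp_k**4
    return (covering_fraction * per_lum_swarm) / (
        (1.0 - covering_fraction) * per_lum_star
    )


if __name__ == "__main__":
    # Hypothetical inputs: cool M dwarf, room-temperature swarm, 22 microns.
    for fraction in (0.01, 0.05, 0.1):
        ratio = excess_ratio(fraction, 3500.0, 300.0, 22e-6)
        print(f"covering fraction {fraction:.2f}: excess ratio {ratio:.1f}")
```

Because a cool emitter is far brighter per unit luminosity in the mid-infrared than a hot photosphere, even a modest covering fraction can yield a large ratio in this toy picture. Real candidates need full spectral-energy-distribution fits, which this sketch does not attempt.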

2. Red dwarfs that survive aggressive filtering

Because ordinary sources of infrared excess are common in the universe, the real work is subtraction. In Project Hephaistos, automated vetting procedures attempt to remove false positives caused by dusty disks, nebular contamination, background galaxies, and image artifacts. After that process, seven red dwarf stars within 900 light-years remain as mid-infrared excess sources of uncertain origin, a formulation that matters because it keeps the finding in the realm of anomaly triage rather than claim-making.

These surviving objects are interesting in part because M dwarfs are not a comfortable place to hide bright, warm dust. Surveys of nearby late-type stars do detect infrared-excess populations, but observational bias and contamination control dominate the eventual candidate lists.

3. K2-18b: a case study in biosignature friction

K2-18b lies approximately 124 light-years away and has become a test bed for the most challenging part of biosignature science: turning a faint spectral signal into a robust detection. JWST has detected methane and carbon dioxide in the planet's atmosphere, and earlier studies had hinted at dimethyl sulfide (DMS), a molecule that on Earth is linked to biology. A later Bayesian reanalysis by NASA lowered the confidence in DMS to 2.7 sigma, below the level the field expects for strong claims.
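For a sense of what a sigma level means, the sketch below converts it into a tail probability under a plain Gaussian assumption. That assumption is ours: retrieval studies often derive an "equivalent sigma" from Bayesian model comparison, so the mapping is only indicative, and the function names are illustrative.

```python
import math


def sigma_to_p_two_sided(n_sigma: float) -> float:
    """Probability of a Gaussian fluctuation at least n_sigma from the mean,
    in either direction."""
    return math.erfc(n_sigma / math.sqrt(2.0))


def sigma_to_p_one_sided(n_sigma: float) -> float:
    """Probability of a Gaussian fluctuation at least n_sigma above the mean."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    # 2.7 is the value quoted for DMS; 3 and 5 are common reference levels.
    for level in (2.7, 3.0, 5.0):
        print(
            f"{level:.1f} sigma: two-sided p = {sigma_to_p_two_sided(level):.2e}, "
            f"one-sided p = {sigma_to_p_one_sided(level):.2e}"
        )
```

Under this reading, a 2.7 sigma result still leaves a chance of a few in a thousand that noise alone produced it, which is why independent reanalysis matters more than the headline number.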

4. The strengths and weaknesses of spectroscopy

Transit spectroscopy measures starlight after it has been filtered through a planet's atmosphere, looking for absorption features that identify specific molecules. JWST's instruments, including NIRSpec, NIRISS, and MIRI, can do this at unprecedented sensitivity, although the signal remains a small modulation on the spectrum of a bright star. For worlds such as K2-18b, the task is not merely to identify a feature but to distinguish between similar molecules (such as DMS and other sulfur-bearing compounds) and to confirm that the wider chemical inventory is self-consistent.

5. The Habitable Worlds Observatory as a starlight-suppression machine

Most biosignature arguments eventually reduce to a bottleneck on contrast: an Earth-like planet can be 10 billion times fainter than its host star. Design studies for HWO focus on suppressing starlight with high-performance coronagraphy, with technology development paths that include active, deformable mirrors and stability requirements reaching into the picometer regime. These are not abstract specifications. They mark the difference between a spectrum that consists mainly of residual starlight and one in which atmospheric fingerprints can be read.
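The 10-billion-to-one figure can be restated in the magnitude units astronomers use, and compared against whatever starlight a coronagraph leaves behind. The sketch below is simple arithmetic; the function names and the residual-contrast values are hypothetical and are not HWO specifications.

```python
import math


def contrast_to_delta_mag(contrast: float) -> float:
    """Convert a planet-to-star flux ratio into a magnitude difference."""
    if contrast <= 0.0:
        raise ValueError("contrast must be positive")
    return -2.5 * math.log10(contrast)


def planet_to_residual_ratio(planet_contrast: float, raw_contrast: float) -> float:
    """Planet flux divided by the residual starlight at the planet's location.

    raw_contrast is the fraction of starlight left after suppression
    (a hypothetical instrument figure, supplied by the caller).
    """
    if raw_contrast <= 0.0:
        raise ValueError("raw_contrast must be positive")
    return planet_contrast / raw_contrast


if __name__ == "__main__":
    planet_contrast = 1e-10  # "10 billion times fainter", from the text
    print(f"magnitude gap: {contrast_to_delta_mag(planet_contrast):.1f}")
    for raw in (1e-8, 1e-9, 1e-10):  # illustrative residual levels only
        ratio = planet_to_residual_ratio(planet_contrast, raw)
        print(f"residual {raw:.0e}: planet/residual = {ratio:.2f}")
```

A contrast of 1e-10 corresponds to a gap of 25 magnitudes. When the residual starlight is a hundred times brighter than the planet, the planet's spectrum is buried, which is why wavefront stability carries so much weight in the design.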

According to NASA planning documents, HWO is a "super-Hubble" concept whose aim is to observe 100 nearby star systems and identify 25 potentially Earth-like planets for closer study. That mission framing matters because it turns biosignatures into a comparative data set rather than a series of isolated headlines.

6. Radio SETI: the quiet revolution of modeling the medium

Sensitivity, bandwidth, and time on sky remain the critical factors in radio searches, but the interstellar medium is an underappreciated bottleneck. Gas and free electrons between the stars scatter radio waves, producing scintillation, or interstellar "twinkle," which can distort and delay transmissions. Recent work with the Allen Telescope Array measured pulsar scintillation and showed that the resulting timing delays can be as short as tens of nanoseconds and that they change over days to months. That knowledge can be used to correct precision experiments and to build an accurate picture of how signals actually propagate.

7. Statistical archaeology of the universe redefines the stakes

The odds have shifted even without a single confirmed technosignature. By folding Kepler-era occurrence rates into a Drake-type calculation and asking whether advanced civilizations have ever existed (not whether they exist now), researchers have argued that the Milky Way must have hosted other technological civilizations unless the chance of one arising on a habitable planet is less than one in 60 billion. This removes the civilization-lifetime term from the question and turns the problem into a kind of historical inference, closer to estimating how often rare events occur over long timescales.

For engineers, this framing is useful because it separates two constraints that are commonly conflated: whether civilizations arise at all, and whether they persist long enough to overlap in time. The former governs how many artifacts or modified systems might exist to be found, and the latter governs the feasibility of real-time communication.
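The one-in-60-billion threshold can be read as the point where the expected number of civilizations over the galaxy's history is about one. The sketch below assumes, for illustration only, that this corresponds to roughly 60 billion habitable planets and that each planet is an independent trial. Both the planet count (back-derived from the threshold) and the function names are assumptions, not figures taken from the study.

```python
import math


def expected_count(n_planets: float, p_per_planet: float) -> float:
    """Expected number of planets on which a civilization ever arose."""
    return n_planets * p_per_planet


def prob_at_least_one(n_planets: float, p_per_planet: float) -> float:
    """P(at least one) = 1 - (1 - p)^N, computed stably for very small p."""
    return -math.expm1(n_planets * math.log1p(-p_per_planet))


if __name__ == "__main__":
    n_habitable = 6e10  # assumed planet count implied by "one in 60 billion"
    for p in (1e-12, 1.0 / 6e10, 1e-9):
        print(
            f"p = {p:.2e}: expected = {expected_count(n_habitable, p):.2f}, "
            f"P(at least one) = {prob_at_least_one(n_habitable, p):.3f}"
        )
```

Note that no lifetime term appears anywhere in the calculation. The question is whether a civilization ever arose, so only the number of trials and the per-planet probability enter.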

Taken together, these threads describe a field that is increasingly instrument-driven. The strongest signs of progress are not proclamations but pipelines: catalogs that pass contamination tests, spectral retrievals that hold up under independent modeling, and observatory designs that treat contrast and stability, not sheer collecting area, as first principles.

The near-term goal is a narrower set of targets and a more precise list of failure modes. That is what mature science usually looks like: not certainty, but constraints tight enough that the next measurement can be decisive.