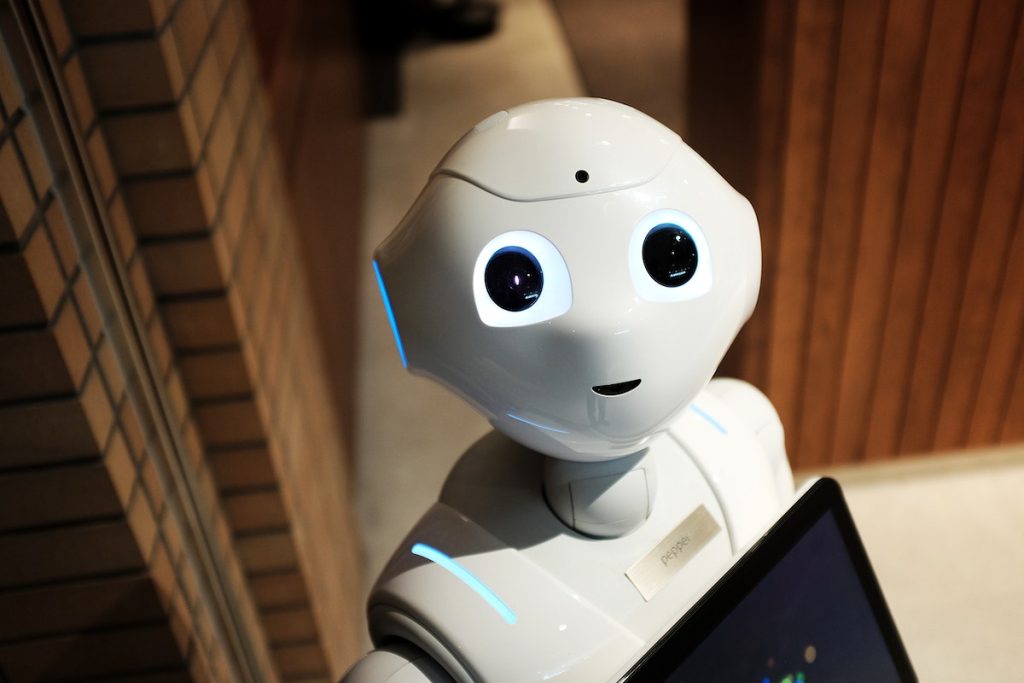

A kick from a humanoid robot sent a man tumbling to the ground, raising questions about how close advanced robotics has come to crossing into a “physical danger zone.” Whether the robot was autonomous or remotely controlled, the incident points to an urgent challenge: ensuring that machines with human-like agility and strength do not harm people outside controlled environments.

1. From Fictional Ethics to Engineering Constraints

Isaac Asimov’s First Law of Robotics, that a robot may not injure a human, has inspired decades of ethical discourse. Real-world robots, however, have no consciousness; they are assemblies of actuators, sensors, control systems, and AI. The principle of nonmaleficence must therefore be implemented both as a technical constraint and as a legal duty. Standards such as BS 8611 translate these ideals into engineering requirements: identifying hazards, embedding safeguards, and assigning accountability for robot behavior. Such guidelines also address less conventional risks, including robotic deception, addictive interaction, and biased learning systems, and are thus directly applicable to humanoids that work for or alongside humans.

2. Technical Design Challenges in Humanoid Safety

Humanoids pose unique safety challenges. According to Nathan Bivans, CTO at FORT Robotics, one of the most critical factors is dynamic stability. “Cutting power to a humanoid causes it to collapse, potentially injuring people standing nearby,” he said. Versatility also adds complexity: advanced perception and physical AI let humanoids adapt to an almost limitless number of scenarios, so safety standards must account for this broad behavior envelope. Hardware degradation over time, sensor interference from dust or noise, and weak cybersecurity can also compromise safety. Integrated hardware-software design is required for robust, real-time perception and control that avoids accidents in unpredictable environments.
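The collapse problem Bivans describes suggests that a stop request should not simply remove power. The following is a minimal sketch of that idea, not a description of any vendor's controller: the mode names, the `next_mode` function, and the 0.30 m rest height are all hypothetical and chosen only for illustration.

```python
from enum import Enum, auto


class Mode(Enum):
    RUNNING = auto()
    CONTROLLED_CROUCH = auto()  # actuators stay powered while the body lowers
    POWER_OFF = auto()          # power removed only once the robot is down


def next_mode(mode, stop_requested, body_height_m, rest_height_m=0.30):
    """Return the mode for the next control tick.

    Hypothetical rule: a stop request never cuts power directly. Power is
    removed only after the body has been lowered to rest_height_m.
    """
    if mode is Mode.RUNNING and stop_requested:
        return Mode.CONTROLLED_CROUCH
    if mode is Mode.CONTROLLED_CROUCH and body_height_m <= rest_height_m:
        return Mode.POWER_OFF
    return mode


def simulate_stop(start_height_m=0.90, lower_per_tick_m=0.10):
    """Run the state machine after a stop request; return (trace, final height)."""
    mode, height, trace = Mode.RUNNING, start_height_m, []
    while mode is not Mode.POWER_OFF:
        mode = next_mode(mode, True, height)
        if mode is Mode.CONTROLLED_CROUCH:
            # Assumed fixed lowering rate; a real robot would follow a planned trajectory.
            height = max(height - lower_per_tick_m, 0.0)
        trace.append(mode)
    return trace, height
```

The design choice here is that `POWER_OFF` is reachable only through `CONTROLLED_CROUCH`, so no single event can drop a standing robot onto a bystander.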

3. Defense Roots and the Race for Capability

History demonstrates that leaps in robotics are often rooted in defense programs. Boston Dynamics’ PETMAN and ATLAS platforms, for instance, were developed with U.S. defense funding; ATLAS was built for DARPA’s disaster-response challenge, whose tasks included climbing ladders, driving vehicles, and manipulating heavy objects. These robots rely on high-performance hydraulic actuators and advanced control software, allowing dynamic motion but necessitating substantial power infrastructure. Such capabilities reflect a tension between engineering for maximum performance and embedding strict safety boundaries.

4. Vietnam’s Risk-Based AI Governance

On December 10, 2025, Vietnam passed its first Artificial Intelligence Law, effective March 1, 2026. The law institutes a four-tier risk classification, ranging from prohibited “unacceptable risk” systems to low-risk applications, and requires stricter oversight for high-risk AI in sectors such as healthcare, finance, and infrastructure. Providers must perform conformity assessments, register systems in the National AI Database, and implement human oversight and incident-reporting mechanisms. This framework follows the global trend toward AI-specific laws, with particular attention to national sovereignty and cultural stability.

5. Institutional Mechanisms for Enforcement

Effective governance requires dedicated institutions. Vietnam’s law provides for a centralized AI coordinating body, similar to the European AI Office, in charge of developing national programs, mobilizing resources, and ensuring compliance. International models include the UK’s AI Safety Institute and Singapore’s AI Verify Foundation. They illustrate how independent evaluation bodies can distill principles like transparency and accountability into verifiable criteria, from bias testing to abnormal behavior control, particularly for systems that integrate physical hardware and AI software.

6. International Standards and IEEE Frameworks

The IEEE Humanoid Study Group has identified classification, stability, and human-robot interaction as the key areas for standards development. For mainstream deployment, quantifiable stability metrics, predictive risk modeling, and safe interaction guidelines remain essential. Without harmonized standards, humanoid deployment will stay confined to controlled environments, hindering adoption even where the technology is ready.
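To make “quantifiable stability metrics” concrete, here is one simple textbook-style measure: the static margin between the ground projection of the center of mass and the edge of the support polygon. This is an illustrative assumption, not a metric the study group is stated to have adopted, and it ignores the dynamic effects that matter for a walking humanoid. The function name and the footprint dimensions are hypothetical.

```python
import math


def stability_margin(com_xy, support_polygon):
    """Static stability margin in the units of the inputs (e.g. metres).

    com_xy: (x, y) ground projection of the center of mass.
    support_polygon: convex polygon as (x, y) vertices in counter-clockwise order.

    Returns the smallest signed distance from the point to any edge line:
    positive when the point is inside the polygon, negative when it lies
    beyond at least one edge.
    """
    px, py = com_xy
    margin = math.inf
    n = len(support_polygon)
    for i in range(n):
        x1, y1 = support_polygon[i]
        x2, y2 = support_polygon[(i + 1) % n]
        ex, ey = x2 - x1, y2 - y1
        # Cross product is positive when the point is to the left of the
        # edge, which means "inside" for counter-clockwise vertices.
        cross = ex * (py - y1) - ey * (px - x1)
        margin = min(margin, cross / math.hypot(ex, ey))
    return margin


# Hypothetical 0.20 m x 0.10 m single-foot support area.
foot = [(0.0, 0.0), (0.20, 0.0), (0.20, 0.10), (0.0, 0.10)]
centered = stability_margin((0.10, 0.05), foot)   # inside: positive margin
overhang = stability_margin((0.30, 0.05), foot)   # outside: negative margin
```

A standard could, for example, require that such a margin stay above a stated threshold during defined test motions; the threshold itself would be for the standards body to set.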

7. Bridging Simulation and Real-World Adaptation

According to Ayanna Howard, physical AI has to learn to adapt safely to dynamically changing and unpredictable environments. Simulation can prepare robots for controlled variations, but how that training transfers to real-world scenarios that combine complex social cues, environmental changes, and simultaneous multitask interactions remains unsolved. Real-time processing speed is another bottleneck: for a moving robot, a delay of even one second can result in a physical accident.
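A rough back-of-the-envelope calculation shows why a one-second delay matters. The sketch below assumes constant speed during the delay and uses a hypothetical walking speed of 1.5 m/s and a hypothetical 0.3 m clearance to the nearest person; neither figure comes from the source.

```python
def blind_travel_m(speed_m_s, latency_s):
    """Distance covered before the robot can react, assuming constant speed."""
    return speed_m_s * latency_s


def max_latency_s(speed_m_s, clearance_m):
    """Largest processing delay that keeps blind travel within the clearance."""
    return clearance_m / speed_m_s


# Hypothetical figures for illustration only.
travel = blind_travel_m(speed_m_s=1.5, latency_s=1.0)    # 1.5 m covered "blind"
budget = max_latency_s(speed_m_s=1.5, clearance_m=0.3)   # roughly 0.2 s
```

Under these assumptions the robot moves a full 1.5 m before reacting, five times the available clearance, and this estimate does not yet include braking distance.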

8. Identifying High-Risk AI and Ensuring Compliance

Frameworks like the seven-step categorization process under the EU AI Act help determine whether an AI system is high-risk. For humanoids, classification often rests on intended use, profiling, or any other capability that could adversely affect health, safety, or fundamental rights. Systems in high-risk categories are subject to obligations regarding data governance, transparency, and human oversight, with exemptions narrowly defined and publicly justified.

9. Strategic Sector Focus for Safe Deployment

Vietnam’s law encourages early standard-setting for high-impact sectors like healthcare, education, and public administration. These domains offer measurable outcomes, observable risks, and clear feedback loops, making them well suited to sandbox testing. Concentrating resources in this way avoids the inefficiency of spreading AI projects thinly across unrelated fields.

Humanoid robots are no longer confined to cinematic imagination or laboratory prototypes. As the T800’s kick demonstrates, they are now part of the physical space where engineering precision meets legal accountability. Only a carefully considered synthesis of advanced robotics and AI governance, from Vietnam’s risk-based law to IEEE’s technical frameworks, will ensure that such machines become trusted collaborators rather than uncontrolled hazards.