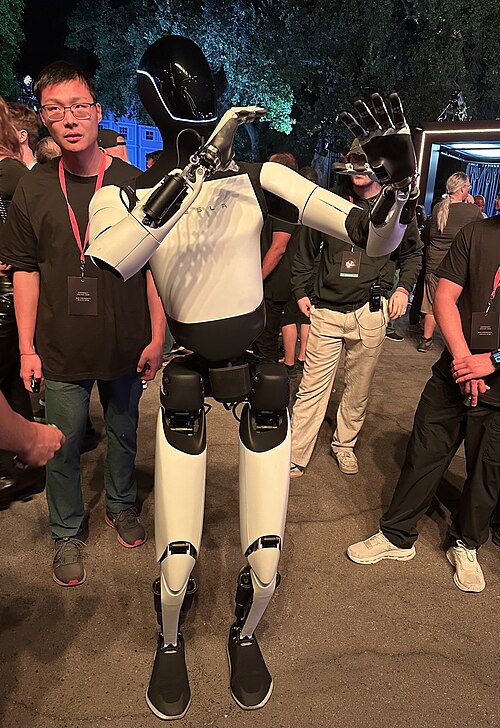

It is a scene straight out of a big-budget science fiction movie: a humanoid robot walks across the Caltech campus, stops, and launches a drone from its back. The flying machine soars over obstacles, descends, then morphs into a wheeled vehicle and finishes the job without human prompting. No special effects here, just the X1 system at work: a novel platform that melds bipedal walking, aerial flight, and ground rolling into one integrated autonomous package.

1. Combining Three Modes of Mobility into One System

At the center of X1 is a modified Unitree G1 humanoid carrying the M4, Caltech’s Multi-Modal Mobility Morphobot. While the humanoid negotiates rough terrain, stairways, and narrow passages, M4 transitions smoothly between quadcopter flight and wheeled locomotion. The combination pushes beyond the limits of single-mode robots: drones have limited endurance, wheeled systems cannot climb, and most walking robots are slow or unstable. X1’s autonomy automatically chooses the best mode for the mission context so that it can move efficiently through an uncertain world.

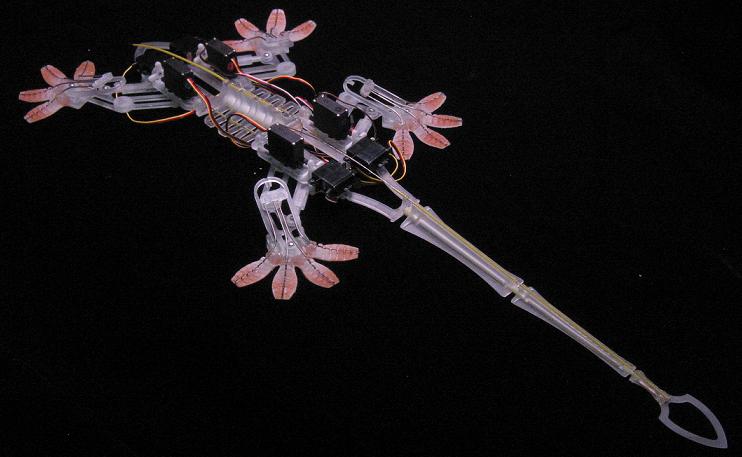

2. Bioinspired Morphing & Appendage Repurposing

M4 draws its design from animal locomotion strategies, but it leans toward plasticity rather than mimicry. Its four shrouded propellers double as wheels on articulated legs, providing two degrees of freedom in the frontal and sagittal planes. This allows transformations into an unmanned aerial system, an unmanned ground vehicle, and even thruster-assisted modes for steep inclines, akin to birds’ wing-assisted incline running. Each propeller-motor unit generates about 2.2 kgf of thrust, or roughly 9 kgf in total, enabling payload scalability without sacrificing mobility.
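
The thrust figures above can be checked with a few lines of arithmetic. The sketch below uses only the per-unit thrust and unit count quoted in the text; the vehicle mass passed to `thrust_to_weight` is a hypothetical value chosen for illustration, not a published specification.

```python
# Figures quoted in the text: four propeller-motor units, about 2.2 kgf each.
THRUST_PER_UNIT_KGF = 2.2
NUM_UNITS = 4


def total_thrust_kgf(per_unit=THRUST_PER_UNIT_KGF, units=NUM_UNITS):
    """Total static thrust in kilogram-force."""
    return per_unit * units


def thrust_to_weight(total_mass_kg, per_unit=THRUST_PER_UNIT_KGF, units=NUM_UNITS):
    """Thrust-to-weight ratio; 1 kgf is the weight of 1 kg, so units cancel."""
    return total_thrust_kgf(per_unit, units) / total_mass_kg


if __name__ == "__main__":
    print(f"total thrust: {total_thrust_kgf():.1f} kgf")
    # 6.0 kg is a HYPOTHETICAL all-up mass, used only to show the calculation.
    print(f"thrust-to-weight at 6.0 kg: {thrust_to_weight(6.0):.2f}")
```

Any ratio above 1 leaves margin for payload or maneuvering, which is the sense in which the thrust budget supports payload scalability.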

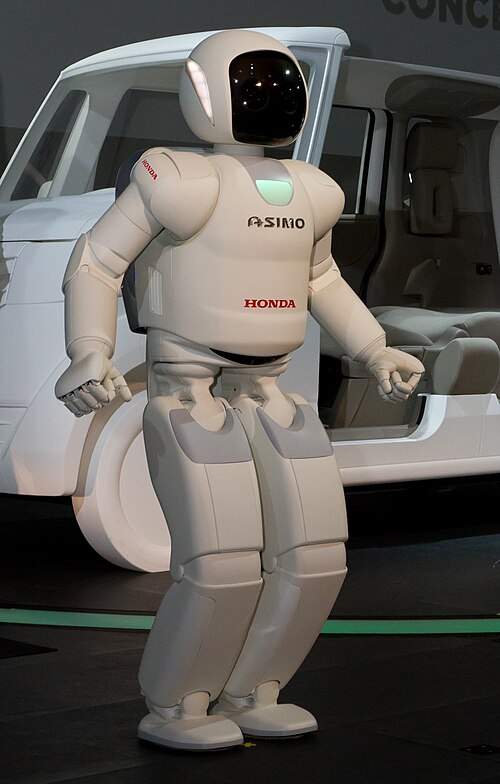

3. Physics-Based Learning for Humanoid Locomotion

While most humanoids get their gait from laboratory-captured human motion, the X1 has learned its gait from physics-based models. “The robot learns to walk as the physics dictate. It can walk on different terrain types, it can climb stairs, and importantly, it can do so while carrying the M4 on its back,” said Aaron Ames, director of CAST. This learning-based approach lets the humanoid adjust dynamically to uneven surfaces, changing payloads, and environmental disturbances.

4. Sensor Fusion & Terrain-Aware Navigation

Sensor fusion algorithms bring lidar, camera, and range-finder data into the autonomy stack. Extended Kalman Filters are combined with AI-driven perception to provide robust localization, even in GPS-denied environments. Terrain-aware SLAM enables mapping and obstacle avoidance in cluttered disaster zones, while secure controllers like TII’s Saluki make onboard decision-making resilient against interference or cyber threats.
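
To give a feel for what an Extended Kalman Filter does with a range-finder reading, here is a minimal scalar sketch. It is not X1's filter: the one-dimensional state, the beacon at a known height, and all noise values are assumptions made for illustration. The nonlinear part is the measurement model, which is linearized through its Jacobian at each update.

```python
import math


def ekf_predict(x, P, u, Q):
    """Predict step for the motion model x' = x + u (its Jacobian is 1)."""
    return x + u, P + Q


def ekf_update_range(x, P, z, R, beacon_height):
    """Update with a nonlinear range measurement z = sqrt(x^2 + h^2).

    x is the position along a line, h is the (hypothetical) height of a
    beacon above the origin, and h must be nonzero.
    """
    predicted = math.hypot(x, beacon_height)   # h(x): expected range
    H = x / predicted                          # Jacobian dh/dx at the estimate
    S = H * P * H + R                          # innovation variance
    K = P * H / S                              # Kalman gain
    x_new = x + K * (z - predicted)
    P_new = (1.0 - K * H) * P
    return x_new, P_new


if __name__ == "__main__":
    # Illustrative numbers only: the true position is 4.0, so the true range is 5.0.
    x, P = ekf_predict(x=2.0, P=0.5, u=1.0, Q=0.5)
    x, P = ekf_update_range(x, P, z=5.0, R=0.01, beacon_height=3.0)
    print(f"estimate: {x:.3f}, variance: {P:.4f}")
```

A real stack would carry a multi-dimensional state and fuse several sensors, but the predict, linearize, and correct cycle is the same.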

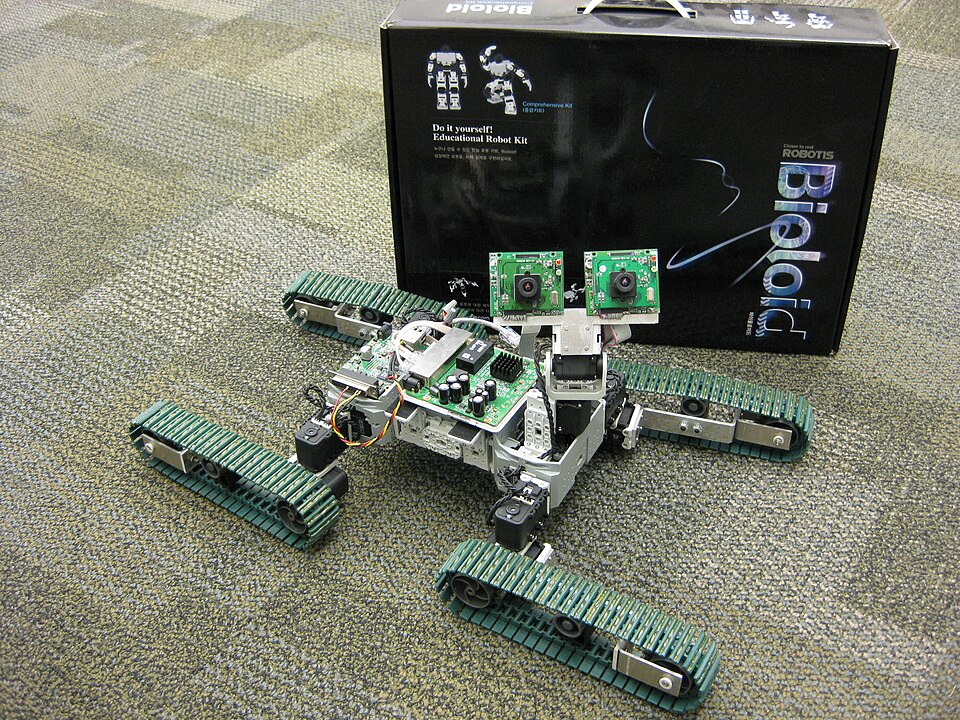

5. Adaptive Path Planning Across Modes

M4’s onboard computer runs a Multi-Modal PRM (probabilistic roadmap) algorithm that discretizes the environment into aerial and ground nodes. It then performs an A* search to find energy-optimal routes, weighing the cost of travel in each mode and the cost of transitioning between flying and rolling. In this way, the robot can minimize energy consumption while maintaining mission speed, a factor of great importance in operations such as search and rescue.
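
The idea of searching over both position and mode can be sketched in a few dozen lines. The example below is a simplification, not M4's planner: it uses a small fixed grid instead of a sampled roadmap, and the per-step and transition costs are made-up numbers. Each search state is a `(cell, mode)` pair, so switching modes is just another edge with its own energy cost.

```python
import heapq
import math

# HYPOTHETICAL energy costs in arbitrary units; not taken from the source.
COST_PER_STEP = {"ground": 1.0, "air": 5.0}
TRANSITION_COST = 3.0


def plan(grid, start, goal):
    """A* over (cell, mode) states; grid[r][c] == 1 blocks ground travel only."""
    rows, cols = len(grid), len(grid[0])
    cheapest = min(COST_PER_STEP.values())

    def heuristic(cell):
        # Manhattan distance at the cheapest per-step cost never overestimates.
        return cheapest * (abs(cell[0] - goal[0]) + abs(cell[1] - goal[1]))

    start_state = (start, "ground")
    best = {start_state: 0.0}
    parent = {start_state: None}
    frontier = [(heuristic(start), 0.0, start_state)]
    while frontier:
        _, cost, state = heapq.heappop(frontier)
        if cost > best[state]:
            continue  # stale queue entry
        cell, mode = state
        if cell == goal and mode == "ground":
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return cost, path[::-1]
        successors = []
        other = "air" if mode == "ground" else "ground"
        # Taking off is always allowed; landing needs a free ground cell.
        if other == "air" or grid[cell[0]][cell[1]] == 0:
            successors.append(((cell, other), TRANSITION_COST))
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            r, c = cell[0] + dr, cell[1] + dc
            if not (0 <= r < rows and 0 <= c < cols):
                continue
            if mode == "ground" and grid[r][c] == 1:
                continue
            successors.append((((r, c), mode), COST_PER_STEP[mode]))
        for nxt, step in successors:
            new_cost = cost + step
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                parent[nxt] = state
                heapq.heappush(frontier, (new_cost + heuristic(nxt[0]), new_cost, nxt))
    return None


if __name__ == "__main__":
    wall = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]  # a wall that cannot be driven around
    print(plan(wall, (0, 0), (0, 2)))
```

With a full wall the planner pays for takeoff, flight, and landing; if a ground detour exists and is cheaper than the two transitions plus the flight, it stays on its wheels.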

6. Dynamic Morphing under Real-World Conditions

The morphing process is synchronized mechanically and algorithmically. As the propeller angles change during landing, the aerodynamic forces change too, so thrust must be compensated in real time. This makes stable “dynamic wheel landings” possible on uneven terrain. On the ground, belt drives turn the wheels, and differential steering allows tight maneuvers. Transformation latency is very low, so the robot can switch modes without pausing its progress along the mission timeline.
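
Two of the relationships in this section can be written down directly. The sketch below is an idealized first-order model, not M4's controller: it assumes that only the cosine component of a tilted rotor's thrust acts vertically (ignoring ground effect and shroud interactions), and it uses the standard differential-drive equations. Function names and sample values are illustrative.

```python
import math


def thrust_for_tilt(vertical_force_needed, tilt_rad):
    """Thrust required so that its vertical component meets the demand.

    Idealized geometry: vertical component = thrust * cos(tilt).
    """
    c = math.cos(tilt_rad)
    if c <= 1e-6:
        raise ValueError("rotor is tilted too far to produce vertical force")
    return vertical_force_needed / c


def differential_drive(v_left, v_right, track_width):
    """Body velocities from wheel speeds.

    Returns (forward speed, yaw rate); equal and opposite wheel speeds
    spin the vehicle in place.
    """
    linear = (v_left + v_right) / 2.0
    angular = (v_right - v_left) / track_width
    return linear, angular


if __name__ == "__main__":
    # Tilting the rotors by 60 degrees doubles the thrust needed in this model.
    print(thrust_for_tilt(10.0, math.radians(60)))
    # Opposite wheel speeds: zero forward motion, pure rotation.
    print(differential_drive(-1.0, 1.0, track_width=0.5))
```

The first function shows why thrust has to rise as the propellers fold toward their wheel orientation; the second shows why differential steering permits turns with zero radius.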

7. Control Architecture for Disaster Environments

X1 can be equipped with heuristic shortest-path algorithms for obstacle avoidance, enhanced for dynamic hazards, along with robust motion planning and tracking systems for high-risk missions. Tube-based Model Predictive Control will maintain safety margins in the presence of perception errors or environmental disturbances. This architecture will enable real-time re-planning whenever obstacles move unpredictably, so that the robot remains crash-free in a cluttered, evolving disaster zone.
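
The safety-margin idea behind tube-based MPC can be shown without the optimizer itself. The sketch below covers only two ingredients, under strong assumptions: a one-dimensional system `x' = x + u + w` with bounded disturbance `w`, and a simple proportional feedback that keeps the real state near a nominal plan. The nominal plan is kept away from the obstacle by the tube radius, so the real state cannot reach it. All names and numbers are hypothetical.

```python
def tube_radius(w_max, gain):
    """Bound on tracking error for e' = (1 - gain) * e + w, with |w| <= w_max."""
    if not 0.0 < gain <= 1.0:
        raise ValueError("gain must be in (0, 1] for this bound")
    return w_max / gain


def tightened_limit(limit, w_max, gain):
    """Limit the nominal plan must respect so the real state stays below `limit`."""
    return limit - tube_radius(w_max, gain)


def simulate(nominal, disturbances, gain):
    """Track a nominal position sequence with feedforward plus error feedback."""
    actual = [nominal[0]]
    for k in range(len(nominal) - 1):
        feedforward = nominal[k + 1] - nominal[k]
        feedback = gain * (nominal[k] - actual[-1])
        actual.append(actual[-1] + feedforward + feedback + disturbances[k])
    return actual


if __name__ == "__main__":
    wall, w_max, gain = 10.0, 0.2, 0.5          # illustrative values
    safe = tightened_limit(wall, w_max, gain)   # nominal plan stops here
    nominal = [min(safe, 2.4 * k) for k in range(8)]
    worst_case = [w_max] * (len(nominal) - 1)   # disturbance always pushes toward the wall
    actual = simulate(nominal, worst_case, gain)
    print(f"closest approach to wall: {wall - max(actual):.3f}")
```

A full tube MPC would solve an optimization for the nominal plan at every step; the tightening shown here is what lets that plan ignore the disturbance while the feedback absorbs it.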

8. Applications in Emergency Response and Beyond

With triple mobility and autonomous operation, X1 aims to become a go-to platform for applications such as urban search and rescue, military reconnaissance, infrastructure inspection, and autonomous logistics. In a collapsed building, for example, it can walk over debris, fly over gaps that cannot be crossed on foot, and roll into confined spaces. In steep or obstructed terrain, thruster-assisted MIP (mobile inverted pendulum) mode provides traction and reach that traditional robots lack. Its secure, adaptive autonomy is designed for deployment in areas where human control is unavailable or very limited.

9. Reliability and Safety-Critical Autonomy

Claudio Tortorici of TII emphasizes trust: “We install different kinds of sensors (lidar, cameras, range finders), and we combine all these data to understand where the robot is, and the robot understands where it is to go from one point to another.” Safety-critical control, redundancy, and fail-safe mechanisms are planned for future iterations; with such features, robots like the X1 could become dependable assets in public safety roles.

By marrying humanoid adaptability with morphing aerial-ground mobility, X1 represents a new frontier in autonomous robotics, where mode-switching is not a gimmick but a mission-critical capability that is engineered for the most exacting environments.