It began as a quiet revolution: AI woven into daily routines, from writing emails to planning vacations. But behind every seamless interaction lies an industrial-scale operation of servers, cooling systems, and power plants, drawing on vast amounts of electricity and water. As AI becomes a constant companion, its environmental footprint is no longer invisible. It is measurable, and it is growing fast.

1. Fossil Fuels at the Core of AI’s Power

Most AI computing happens in data centers that still run largely on electricity from fossil fuels. As Noman Bashir of the MIT Climate and Sustainability Consortium points out, “Since we’re building data centers at a rate where we can’t add more renewable sources of energy onto the grid, nearly all of the new data centers are fueled by fossil fuels.” The International Energy Agency estimates that in 2026, electricity consumed by data centers, cryptocurrency, and artificial intelligence will account for 4% of annual global energy demand, about the same as Japan’s total electricity consumption.

2. The Jevons Paradox in the Age of Artificial Intelligence

Even as software and hardware become more efficient, the overall energy consumption of AI may still increase, a contemporary version of the 19th-century Jevons Paradox. The University of Maine’s Jon Ippolito sums it up as follows: “The cheaper resources get, the more we tend to use them anyway.” The rapid growth of generative AI models bears this out: their parameter counts and computational costs increase with every iteration.

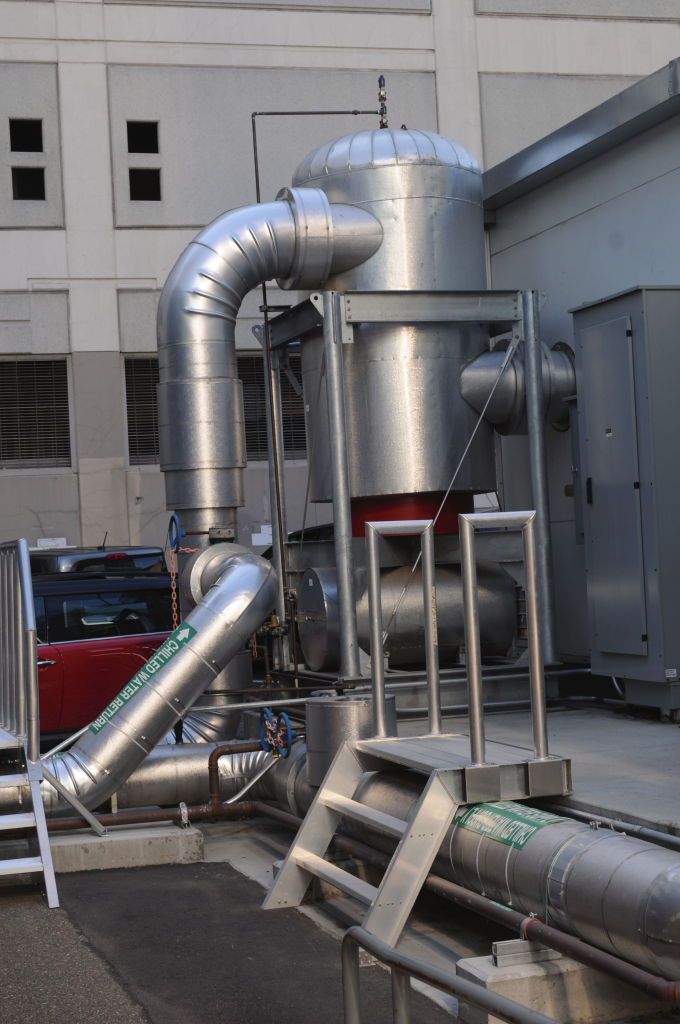

3. Cooling Demands and Water Stress

Servers running heavy AI workloads must be cooled, and cooling is a water-intensive process. Large data centers can use as much as 5 million gallons of water a day, equivalent to the daily consumption of a town of 50,000 people. Researchers at the University of California, Riverside estimate that it takes about one bottle of water to cool a 100-word AI prompt. The choice of cooling technology (evaporative air systems versus direct-to-chip or immersion cooling) can determine whether a facility’s water usage is sustainable or a strain on local aquifers.
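As a quick sanity check on the town comparison, the two figures quoted above imply a per-person water use that can be worked out directly. The sketch below uses only those two numbers; the variable names and the litre conversion are added here for illustration.

```python
# Figures quoted in the article
daily_use_gallons = 5_000_000   # upper-end daily water use of a large data center
town_population = 50_000        # size of the town used for comparison

LITRES_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon

# Implied daily water use per resident
per_person_gallons = daily_use_gallons / town_population
per_person_litres = per_person_gallons * LITRES_PER_US_GALLON

print(f"{per_person_gallons:.0f} gallons per person per day")
print(f"{per_person_litres:.0f} litres per person per day")
```

The comparison therefore assumes roughly 100 gallons per resident per day.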

4. Measuring AI’s True Carbon Footprint

Determining the emissions from a single AI query is complex. Variables include model size, task complexity, and the carbon intensity of the grid at the time of processing. Sasha Luccioni of Hugging Face puts it this way: “We discovered in one of my studies that generating a high-definition image takes as much energy as half charging your phone.” For larger models, the cost is thousands of joules per response. Extrapolated to billions of daily requests, the totals rival the annual electricity consumption of entire cities.
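The relationship between per-response energy, grid carbon intensity, and request volume can be sketched in a few lines. Every input value below is an illustrative assumption chosen to match the orders of magnitude mentioned above ("thousands of joules", "billions of daily requests"), not a measurement, and the function name is hypothetical.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def emissions_grams(energy_joules: float, grid_g_per_kwh: float) -> float:
    """Convert energy use to grams of CO2 for a given grid carbon intensity."""
    return energy_joules / JOULES_PER_KWH * grid_g_per_kwh


# Illustrative assumptions, not measured values
energy_per_response_j = 3_000.0   # "thousands of joules per response"
grid_intensity = 400.0            # assumed grid mix, in gCO2 per kWh
requests_per_day = 1_000_000_000  # "billions of daily requests"

per_response_g = emissions_grams(energy_per_response_j, grid_intensity)
daily_kwh = energy_per_response_j * requests_per_day / JOULES_PER_KWH
daily_tonnes = per_response_g * requests_per_day / 1e6  # grams to tonnes

print(f"{per_response_g:.2f} g CO2 per response")
print(f"{daily_kwh:,.0f} kWh per day")
print(f"{daily_tonnes:,.0f} tonnes CO2 per day")
```

Under these assumed inputs, a single response emits about a third of a gram of CO2, while a billion responses a day add up to roughly 830 MWh of electricity. The per-query figure looks negligible; the aggregate does not, and it scales linearly with the grid's carbon intensity.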

5. Engineering Solutions for Energy Efficiency

Researchers are experimenting with ways to shrink the operational footprint of AI. Engineers at the MIT Lincoln Laboratory Supercomputing Center apply “power capping,” limiting processors to 60–80% of maximum draw, which reduces both energy usage and operating temperatures. They also use software that estimates training speed to end low-potential model runs early, saving up to 80% of compute time without a reduction in accuracy. Carbon-aware scheduling platforms such as Clover can shift non-urgent workloads to hours and regions with cleaner power, decreasing carbon intensity by up to 90%.
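The idea behind carbon-aware scheduling can be illustrated with a minimal sketch: given an hourly forecast of grid carbon intensity, a deferrable job is started in the contiguous window with the lowest total intensity. This is not Clover's actual algorithm; the function name `cleanest_window` and the forecast values are hypothetical, chosen only to show the principle.

```python
from typing import Sequence


def cleanest_window(intensity: Sequence[float], duration: int) -> int:
    """Return the start index of the contiguous `duration`-hour window
    with the lowest total carbon intensity (earliest window on ties)."""
    if duration <= 0 or duration > len(intensity):
        raise ValueError("duration must be between 1 and len(intensity)")

    # Sliding-window sum: add the hour entering the window, drop the one leaving
    window_sum = sum(intensity[:duration])
    best_sum, best_start = window_sum, 0
    for start in range(1, len(intensity) - duration + 1):
        window_sum += intensity[start + duration - 1] - intensity[start - 1]
        if window_sum < best_sum:
            best_sum, best_start = window_sum, start
    return best_start


# Hypothetical forecast: gCO2 per kWh for each of the next 8 hours
forecast = [520, 480, 310, 190, 170, 220, 400, 510]
job_hours = 3

start = cleanest_window(forecast, job_hours)
run_now = sum(forecast[:job_hours])
run_later = sum(forecast[start:start + job_hours])
saving = 1 - run_later / run_now

print(f"start at hour {start}, carbon intensity reduced by {saving:.0%}")
```

With this made-up forecast, deferring the job by three hours cuts its average carbon intensity by a little over half. Real schedulers also have to weigh deadlines, data locality, and capacity in each region, which this sketch ignores.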

6. Integration of Renewables and Grid Challenges

Transitioning AI data centers to renewable energy is a priority, but the scale of demand complicates the shift. While companies pledge to triple nuclear capacity by 2050 and expand wind and solar, AI’s 24/7 load profile means data centers often fall back on natural gas or coal when renewable output dips. In Virginia, the state with the highest concentration of data centers, natural gas accounts for over half of electricity generation, locking in higher carbon intensity.

7. Water-Saving Cooling Technologies

New cooling technologies can likewise reduce water use. Closed-loop systems recycle water multiple times and can cut freshwater intake by as much as 70%. Immersion cooling, in which servers are submerged in non-conductive fluids, reduces water requirements and raises thermal efficiency. Pairing these systems with renewable-powered operation offers a double advantage: lower carbon emissions as well as lower water use.

8. Practical Steps for Users and Developers

Personal decisions can also decrease the environmental footprint of AI. Streamlining requests, turning off unneeded AI features, and running models locally rather than in the cloud all decrease energy consumption. As Marissa Loewen, an early adopter, has described: “Having local on your computer in your home enables you to also control your use of the electricity and consumption.” Developers can go further by designing AI algorithms for efficiency, recycling hardware components, and making environmental performance data publicly available.

The cost of AI to the environment is not a distant, hypothetical threat. It is present in every search, image, and video generated today. The engineering challenge now is to match the runaway growth of AI with a similarly dramatic expansion of sustainable infrastructure, energy procurement, and water systems.