The warning came with unusual urgency: “If you want a device, you better buy it now,” said Avril Wu, senior research vice president at TrendForce. Her advice reflects a deepening crisis in the global semiconductor market. AI’s surging demand for memory chips has created a worldwide shortage, prices for devices that depend on memory are set to climb in the coming months, and the constraints are expected to last into 2027.

1. AI Data Centers Are Creating Unprecedented Memory Demand

AI workloads inherently consume large amounts of memory. Training and inference require memory that is large, persistent, and located close to the processors performing the computations. Where a typical laptop ships with 16 GB, the average AI server uses 200 GB or more, and AI data centers pair state-of-the-art GPUs with large amounts of DRAM and high-bandwidth memory (HBM). As Sanchit Vir Gogia, co-founder and principal analyst at Greyhound Research, explains, AI data centers “cannot dial this back without breaking performance.”

2. High-Bandwidth Memory and DRAM at the Core of the Shortage

The most urgent demand is for DRAM, especially high-end types such as DDR5 and HBM. Samsung, SK Hynix, and Micron are racing to supply HBM stacks for Nvidia GPUs; each stack consists of multiple DRAM dies stacked vertically for ultra-high-speed performance. Every wafer allocated to HBM directly reduces output of the general-purpose DRAM that goes into PCs and smartphones. Even a 3% imbalance between supply and demand can send prices soaring, and the market is quickly moving past that threshold.

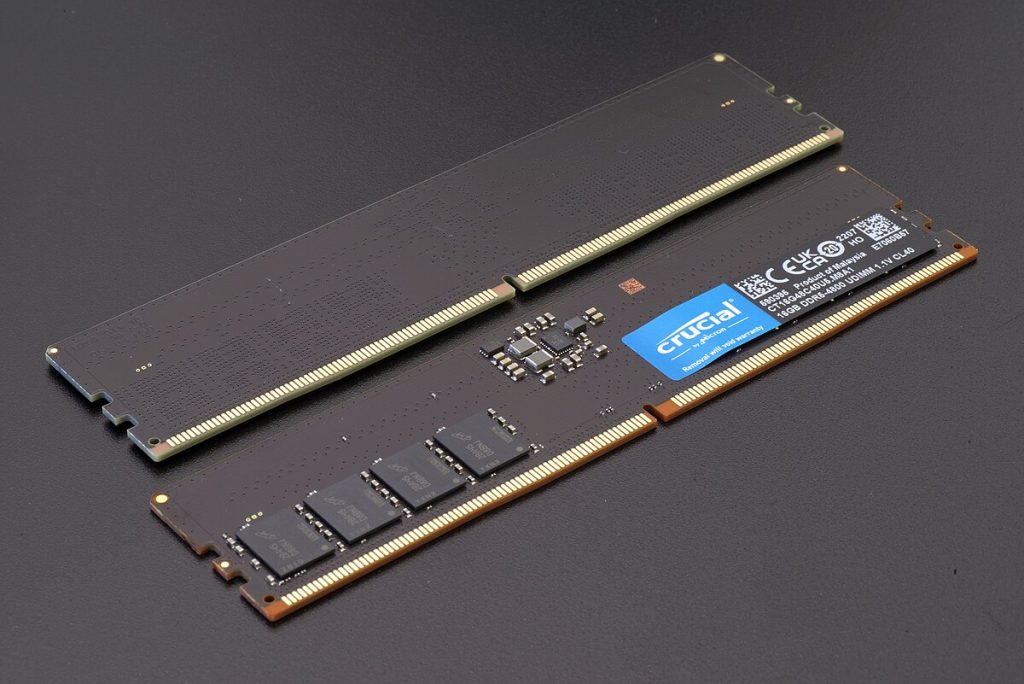

3. Companies Diversifying or Pivoting Away from Consumer Markets

Micron’s decision to discontinue its consumer brand, Crucial, is another sign of the shift in focus. According to Harvard professor Willy Shih, “They can make a lot more profit” serving AI data centers than selling to consumers. That marks a break from long-held priorities. As IDC points out, every wafer used for HBM stacks for Nvidia GPUs is a wafer unavailable for LPDDR5X modules in smartphones or SSDs in laptops.

4. Capacity Constraints Until New Fabs Come Online

Existing infrastructure will be pushed to its limit through the end of 2026. The new high-volume DRAM fab in Boise, Idaho, will not begin production until 2027, despite a planned $200 billion in U.S. investment across Idaho, New York, and Virginia. That new capacity will include advanced HBM packaging for the AI market, but until it comes online, supply growth will stay below historical levels: IDC expects DRAM supply to grow only 16% year over year in 2026.

5. Price Shock for Consumer and Enterprise Devices

The shortage is already evident at retail. A 32 GB DDR5 memory kit that sold for under $130 in October now costs around $400. Market research firm TrendForce reports that DRAM prices are already up 50% from the previous quarter and are set to rise another 40% next quarter. In mid-range smartphones, memory accounts for 15 to 20% of the bill of materials, so a price jump this steep will force higher retail prices, reduced configurations, or both. In a moderate downside scenario, IDC forecasts smartphone average selling prices (ASPs) rising 3 to 5% in 2026, with shipment volumes falling by as much as 2.9%.
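Successive price increases compound, which makes them easy to understate. The hypothetical Python sketch below works through the figures quoted above; the function names are illustrative only, and the bill-of-materials estimate assumes, purely for illustration, that a handset's memory cost rises in line with the 50% quarterly DRAM increase while every other component's cost stays flat.

```python
import math


def compound_change(*pct_changes: float) -> float:
    """Overall price multiplier after applying successive percentage changes."""
    return math.prod(1 + pct / 100 for pct in pct_changes)


def bom_increase_pct(memory_share_pct: float, memory_price_increase_pct: float) -> float:
    """Rise in total bill of materials (%) when only the memory cost changes.

    Hypothetical simplification: all non-memory component costs are held constant.
    """
    return memory_share_pct * memory_price_increase_pct / 100


# TrendForce figures: +50% this quarter, then a further +40% next quarter.
dram_multiplier = compound_change(50, 40)
print(f"DRAM prices after two quarters: {dram_multiplier:.2f}x")  # 2.10x

# Retail example: a kit that sold for under $130 now costs around $400.
# Because $130 is an upper bound on the old price, this ratio is a lower bound.
kit_multiplier = 400 / 130
print(f"32 GB DDR5 kit price change: {kit_multiplier:.2f}x")  # 3.08x

# Assumption: memory is 15-20% of a mid-range handset's BOM and its cost rises 50%.
for share in (15, 20):
    print(f"Memory at {share}% of BOM -> BOM up {bom_increase_pct(share, 50):.1f}%")
```

Two consecutive increases of 50% and 40% compound to about 2.1 times the starting price (a 110% rise), not 90%. Under the stated assumption, a 50% memory price increase alone would lift a mid-range handset's bill of materials by roughly 7.5 to 10%.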

6. RAM’s Effect on the PC Industry and AI PC Adoption

The PC market faces a perfect storm: the refresh cycle driven by Windows 10 reaching end of life coincides with the push for AI PCs. AI PCs with NPUs start at 16 GB of RAM, and high-end models are moving to 32 GB. The memory shortage could make these models unaffordable, forcing spec downgrades at the worst possible moment. In its worst-case scenario, IDC projects the PC market will shrink by 8.9% in 2026, with ASPs rising by as much as 8%.

7. Supply Chain Bottlenecks Beyond Memory

The AI boom is also straining other parts of the semiconductor supply chain. Nvidia’s move to LPDDR memory, traditionally used only in flagship smartphones, adds another major buyer competing for scarce supply. Storage is being squeezed as hyperscalers shift from HDDs to SSDs. Foundry TSMC plans to raise prices on its sub-5 nm process technologies by 10%, adding to the cost burden on AI applications.

8. Strategic Responses and Market Resilience

Major OEMs with supply contracts, such as Apple and Samsung, are largely hedged against shortages in the near term but are likely to hold back on RAM upgrades. Smaller manufacturers and home PC enthusiasts will be hit hardest. Industry recommendations for reducing supplier allocation risk include diversifying suppliers, maintaining safety stock, and closely monitoring materials.

The semiconductor market is reshaping itself to meet AI’s insatiable appetite, and that shift will redefine markets for consumers and businesses alike. With supply constraints built into the next couple of years, the days of cheap memory are over for now.