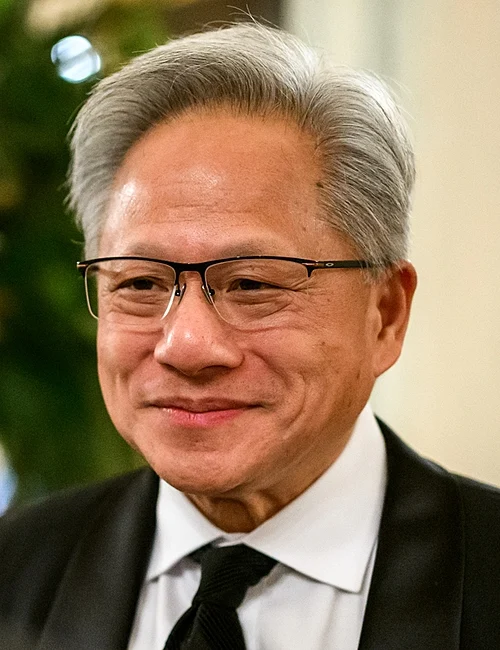

When Nvidia CEO Jensen Huang summarized the AI competition in terms that Nvidia dubbed “a five layer cake” (energy, chips, infrastructure, models, and applications), his assessment was direct: “China has twice as much energy as we have as a nation.”

1. Energy as the Base Layer

Huang’s focus on energy capacity acknowledges a hard fact: AI is an energy-intensive technology. The current generation of AI data centers, or “AI factories,” entails unprecedented power consumption. New data centers being built in the US are expected to exceed 300 MW each, with some sites surpassing 1 GW, a city-scale load reached within two to three years. China has more than double the installed electricity generating capacity of the US, and its growth has been described as “straight up,” while US capacity is flat.
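To put the 300 MW and 1 GW figures in energy terms, the sketch below converts a constant power draw into annual energy. It is a rough illustration, not a measurement: the function name `annual_energy_twh` and the `utilization` parameter are hypothetical, and the default assumes a site runs at full load around the clock, which real facilities may not do.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours (non-leap year)


def annual_energy_twh(power_mw: float, utilization: float = 1.0) -> float:
    """Energy in TWh used over one year by a site drawing power_mw.

    utilization is an assumed average load fraction (1.0 = full load
    all year); it is an illustrative parameter, not a sourced figure.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    energy_mwh = power_mw * utilization * HOURS_PER_YEAR
    return energy_mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


if __name__ == "__main__":
    for label, mw in [("300 MW site", 300), ("1 GW site", 1000)]:
        print(f"{label}: {annual_energy_twh(mw):.2f} TWh per year")
```

Under the full-load assumption, a 300 MW site works out to roughly 2.6 TWh per year and a 1 GW site to roughly 8.8 TWh per year; lowering `utilization` scales both figures down proportionally.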

2. AI Energy Nexus & Resource Strain

The intersection of AI and energy spans electricity consumption, water, critical materials, and environmental impact. Training cutting-edge AI models can draw tens of megawatts for the duration of a run, a scale that has been compared to the energy needed to power a whole country for a year. Inference, the operation or use of a trained model, can consume up to ten times more energy per request than a typical search engine query, especially for multimodal models with hundreds of billions of parameters. China’s advantage here is its capacity to expand power production without the permitting delays that set back US grid expansion.

3. America’s Semiconductor Advantage and Risks

Though Huang stressed that the US is “standing generations ahead” in AI chips, this superiority is in no way assured. Semiconductors are ultimately a manufacturing business, and China’s manufacturing capability should not be underestimated. The US dominates the highest-value-added semiconductor design and manufacturing expertise, but its export control policies have already prompted Chinese countermeasures, including export controls on rare earths and a search for alternative supplies.

4. The Infrastructure Velocity Gap

In the US, building a hyperscale AI data center takes about three years from groundbreaking to operation. In China, according to Huang, “They can build a hospital over the weekend.” China applies the same fast-track approach to industrial-scale energy projects and factories. For AI, the importance of fast deployment to staying competitive cannot be overstated. According to Raul Martynek of DataBank, the US may spend between $50 billion and $105 billion developing its data center operations, but without a fast-track approach comparable to China’s, it risks falling behind.

5. AI Resources Infrastructure: Engineering Challenges

The US will also need an additional 75-100 GW of generating capacity by 2030 to meet AI-driven demand. Meeting that demand with gas-fired generation would require a proportionate 10-20% increase in domestic gas production. Nuclear fission, fusion, and large-scale renewables aren’t as immediate a solution because of challenges in their value chains. China also has a superior opportunity-intelligence ratio, as it can quickly integrate newly available resources into its infrastructure.
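As a rough sense of scale, the 75-100 GW range can be set against the 300 MW and 1 GW site sizes cited earlier. The sketch below is a back-of-the-envelope calculation only: the helper `sites_supported` is hypothetical, and it assumes every megawatt of new capacity serves data center load directly, ignoring reserve margins, capacity factors, and transmission losses.

```python
def sites_supported(new_capacity_gw: float, site_mw: float) -> int:
    """Whole number of sites of size site_mw that new_capacity_gw could power.

    Simplifying assumption (not from the source): nameplate generating
    capacity maps one-to-one onto data center load.
    """
    if new_capacity_gw < 0 or site_mw <= 0:
        raise ValueError("capacity must be >= 0 and site size must be > 0")
    capacity_mw = new_capacity_gw * 1000  # 1 GW = 1000 MW
    return int(capacity_mw // site_mw)


if __name__ == "__main__":
    for capacity_gw in (75, 100):
        for site_mw in (300, 1000):
            count = sites_supported(capacity_gw, site_mw)
            print(f"{capacity_gw} GW covers about {count} sites of {site_mw} MW")
```

On these assumptions, the low end of the range corresponds to about 250 sites of 300 MW or 75 sites of 1 GW, and the high end to about 333 and 100 respectively.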

6. Efficiency versus Brute-Force Scaling

Critics such as Michael Burry point out the problem with Nvidia’s strategy: “Their roadmap is essentially a power consumption roadmap. It’s Mission: Impossible trying to get the industry to consume more and more power.” Burry urges a shift toward AI-optimized ASICs, which could ease the pressure on energy consumption through more efficient chip and model designs. Still, the drive for speed may trump efficiency, at least where energy is abundant, as in China.

7. Strategic Energy Investments and Geopolitical Leverage

AI’s immense appetite is reshaping the demand for reliable energy. American technology titans are acquiring dedicated nuclear power plants and backing fusion companies in a quest to secure a competitive advantage for their AI programs. The Chinese “East data, West compute” policy aims to support data centers with 80 percent non-carbon energy sources by 2025. However, the use of nuclear energy is a sensitive political issue in the country.

8. Grid Modernization and Cybersecurity Threats

Upgrading grids to handle AI-scale loads adds cyber risk. While smart metering and grid improvement systems enhance efficiency, they also widen the attack surface for cyber attacks. The revelation of “kill switch” components in US critical infrastructure that trace back to China highlighted this dual risk. In the contest for energy security, therefore, the resilience of a grid is as important as its power output.

9. Competitive Multiplier through Standardization

Standardization in AI and clean energy can provide a competitive lock-in. The US and China are each seeking to dominate standards organizations because they understand that technology standards shape entire innovation ecosystems. In the race to develop fusion energy, Chinese corporations are already attempting to standardize the kinds of designs they have invested in heavily, while American corporations are seeking proprietary dominance, much as Tesla did with its charging infrastructure standard.

The AI race must now factor in building, powering, and securing infrastructure at scale and speed. The algorithmic race has already broadened into a contest for semiconductor dominance, which, as Huang points out, will amount to very little without the infrastructure underpinning it.