Elon Musk’s stark warning that “AI is the highest ELO battle ever” captured the intensity of a hardware race where speed, scale, and cost efficiency will determine the victors. For investor Gavin Baker, though, the focus came into even sharper view: at full deployment, Nvidia’s next‑generation Blackwell chips could flip the economics of AI compute, threatening Google’s current low‑cost dominance.

1. Blackwell Transition: Engineering Complexity Meets Market Stakes

Baker said the transition from Hopper to Blackwell “is by far the most complex product transition we’ve ever gone through in technology.” In re-designing its products, Nvidia needed more power, liquid cooling, heavier racks and more sophisticated heat management-all factors that delayed deployment timelines by months. Those delays opened an opening for Google to position its Tensor Processing Units, or TPUs, as the lowest-cost way to compute AI workloads.

2. Google TPU Economics: Four- to Six-Fold Cost Advantage

Google’s vertically integrated TPU approach avoids the “Nvidia tax”-the enormous premiums hyperscalers pay for Nvidia GPUs. Estimates from analysts have Google’s AI compute costs at roughly 20% of what rivals pay through high‑end Nvidia hardware for a 4x–6x cost efficiency advantage per unit of compute. This, therefore, allows Google to offer very aggressive pricing-for instance, Gemini 2.5 Pro at $10 versus OpenAI’s $40 for o3.

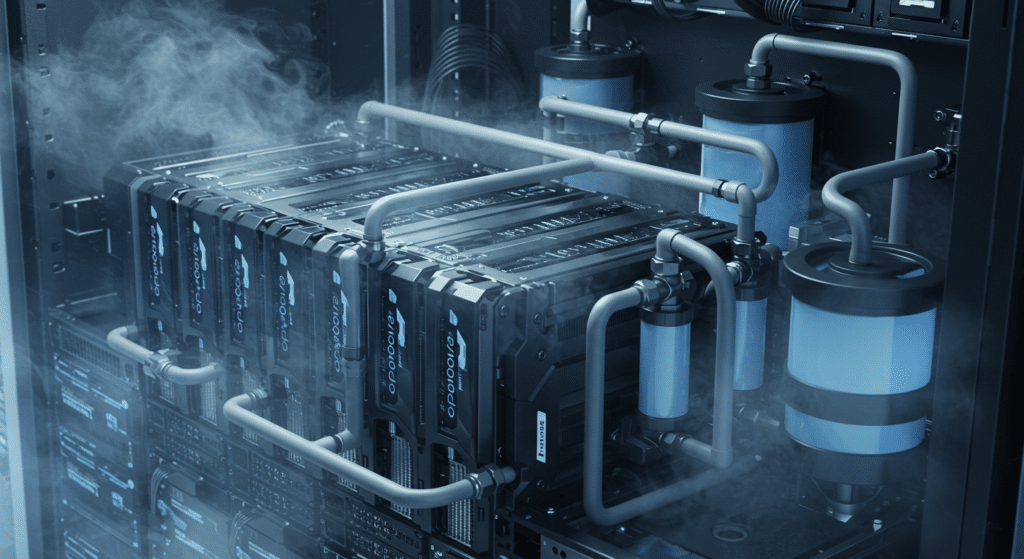

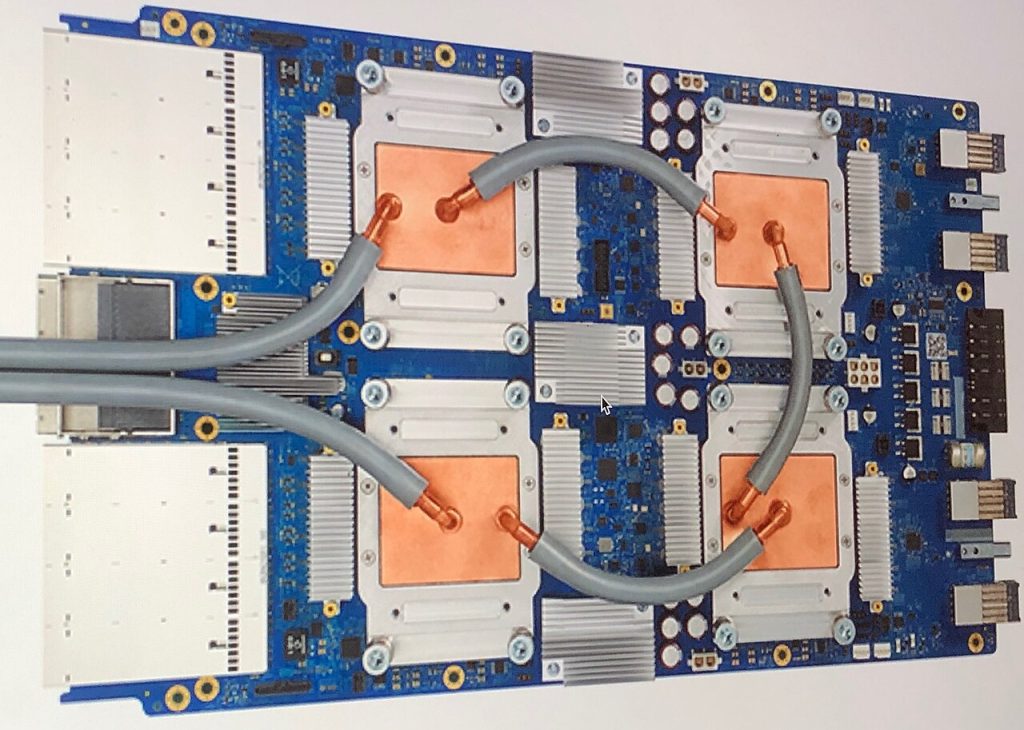

3. Thermal Management: Liquid Cooling as a Competitive Weapon

The systems would feature rack-scale liquid cooling at 120 kW+ per rack in the GB200 NVL72 and the GB300 NVL72. This architecture facilitates energy efficiency up to 25x and water efficiency as high as 300x for conventional air-cooled systems and would save millions annually in cooling costs for hyperscale deployments. The advantages of direct-to-chip heat transfer in liquid cooling enable operation at a warmer water temperature, hugely reducing the demand on mechanical chillers and shrinking environmental impact.

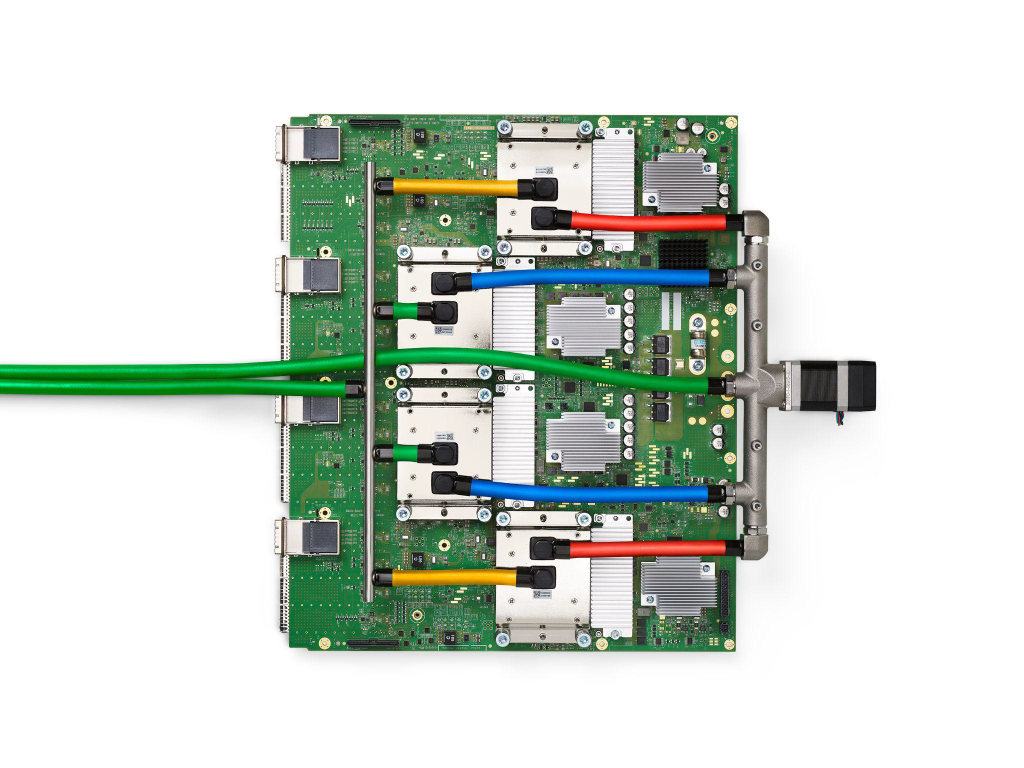

4. Nvidia MGX: Modular Architecture for Rapid AI Factory Deployment

The MGX reference design from Nvidia can address heterogeneous workloads, pre-integrating up to 80% of the components at the factory to reduce deployment timelines from 12 months down to less than 90 days. Their MGX racks combine Grace CPUs with Blackwell GPUs connected via NVLink switch trays, driving coherent 72-GPU domains for trillion-parameter model training. This is achieved by modularization that allows a good degree of future-proofing with Rubin and other architectures arriving.

5. Market Impact: Stocks of TPU Supply Chain Soar

The thought of big TPU deployments has taken stocks of those aligned with Google’s ecosystem-such as Broadcom, Celestica, and TTM Technologies-much further than Nvidia-aligned peers. A further 1 million TPUs in 2026 could add US$10 billion to Broadcom revenue, US$800 million to TTM Technologies revenue, and US$500 million to Celestica revenue. Forecasts for 3 million TPUs in 2026, 5 million in 2027, and 7 million in 2028 underpin the bullish sentiment in this tight supplier group.

6. Meta’s Potential Shift: Strategic Realignment in AI Hardware

Notably, Meta is in talks with Google Cloud to rent TPU capacity as early as 2026 and to deploy TPUs in their facilities by 2027. That would divert billions of dollars from Nvidia’s GPU sales, validating Google’s effort to commercialize TPUs beyond its own infrastructure. Internally, executives inside Google Cloud have said that this could snatch up to 10% of Nvidia’s annual revenues.

7. The Looming 2026 Inflection Point

Baker predicts the first meaningful AI models, trained on Blackwell chips, will be announced early in 2026, with Musk’s xAI potentially among the very first meaningful deployments. New GB300 systems are engineered for “drop‑in compatibility” so that older infrastructure can be swapped out quickly. If Nvidia’s cost per token is below Google’s, the latter may well be compelled to abandon its low‑cost strategy, putting pressure on margins and changing competitive behavior across the AI sector.

8. Geopolitics and Supply Chain Resilience

Export controls on high‑performance chips like Nvidia’s H100 and B100 limit access in China and elsewhere, which drives relocation of AI training to compliant jurisdictions. The AI chip war now intersects with global supply chain strategy, imposing a new requirement for multi‑silicon resilience: workloads that can run across Nvidia GPUs, Google TPUs, AWS Trainium, among other architectures to mitigate political and supply risks.

The next two years will show if Google’s TPU advantage will hold strong with Nvidia’s Blackwell-driven cost inversion and if hyperscale’s can adapt infrastructure quickly enough to keep up with economic advantages of this accelerating “highest ELO battle ever”.