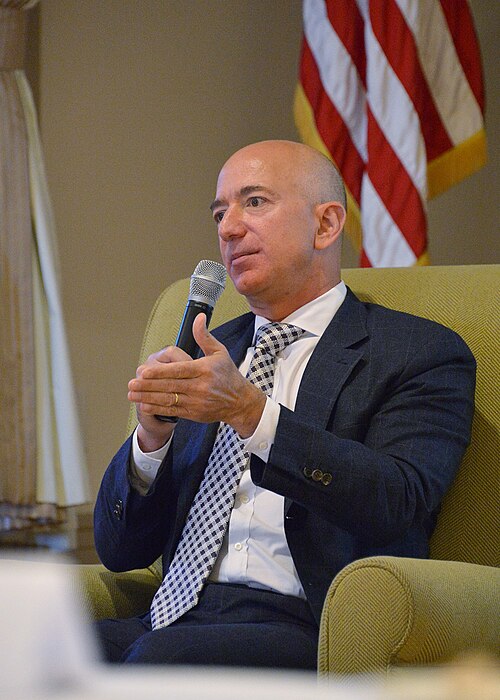

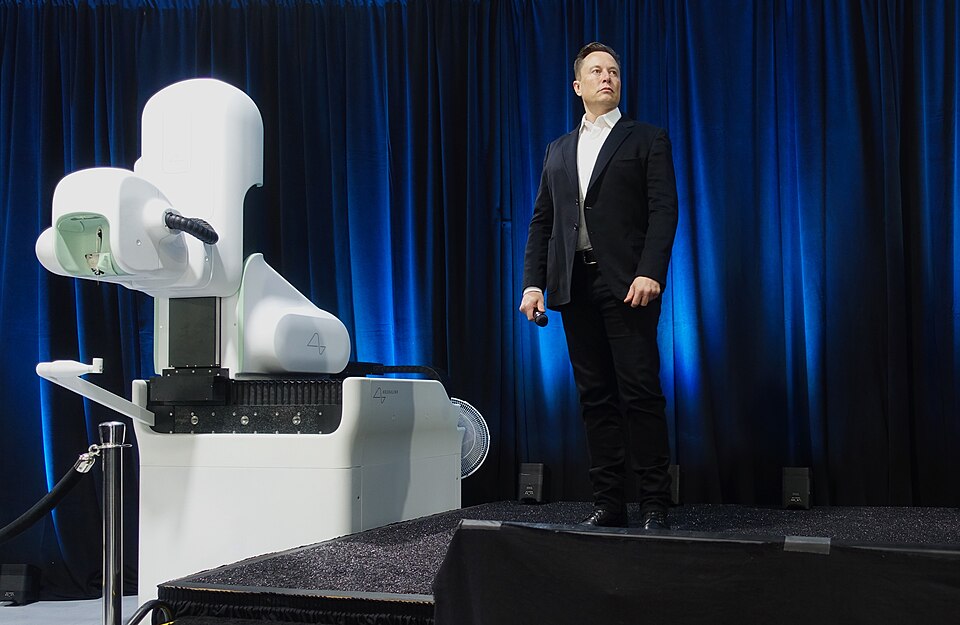

It’s been said that “space is the final frontier.” For Jeff Bezos and Elon Musk, it’s apparently also the next server-farm location. The two billionaire founders, Blue Origin’s Bezos and SpaceX’s Musk, are now locked in a high-stakes race to build artificial intelligence data centers in orbit, a contest that combines the raw ambition of the commercial space race with the insatiable compute demands of the AI boom.

1. Billionaire Rivalry Moves Off-Planet

If reports are to be believed, Bezos’ Blue Origin has been quietly developing orbital data center technology since late 2023, while Musk’s SpaceX plans upgraded Starlink satellites capable of hosting AI workloads. Bezos has also publicly predicted gigawatt-scale data centers in space within 10 to 20 years: “These giant training clusters will be better built in space, because we have solar power there, 24/7. There are no clouds and no rain, no weather.” With a Starlink constellation of more than 6,000 satellites already in orbit, SpaceX may be able to move faster by building on its existing network rather than starting afresh.

2. Why AI Compute Is Heading to Orbit

The rapid growth of the AI sector is driving unprecedented demand for computing power. Goldman Sachs Research estimates that AI’s share of data center workloads will double to 30% over the next two years, and that global data center electricity use will rise 175% from its 2023 level by 2030. Data centers in the United States already consume around 4.4% of the country’s total electricity, a share projected to reach as much as 12% by 2028. Orbital facilities promise relief from these terrestrial energy constraints by tapping uninterrupted solar power and taking cooling loads off strained local grids.
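A 175% increase means 2030 consumption would be 2.75 times the 2023 level. The short sketch below converts that into an implied compound annual growth rate using only the figures quoted above; it assumes smooth year-over-year compounding, which real demand need not follow, and the helper name `implied_cagr` is purely illustrative.

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate that turns 1.0 into growth_multiple over `years`."""
    return growth_multiple ** (1.0 / years) - 1.0

# Goldman Sachs figure quoted above: +175% from 2023 to 2030, i.e. 2.75x the 2023 level.
global_multiple = 1.0 + 1.75
global_cagr = implied_cagr(global_multiple, years=2030 - 2023)

# US data-center share of national electricity: ~4.4% now, up to 12% by 2028 (upper bound).
# Note this is a multiple of the *share*, not of absolute consumption.
us_share_multiple = 12.0 / 4.4

print(f"Global data-center electricity in 2030: {global_multiple:.2f}x the 2023 level")
print(f"Implied compound growth: {global_cagr:.1%} per year")
print(f"US data-center share of electricity: up to {us_share_multiple:.2f}x today's share")
```

Under that smooth-compounding assumption, the global projection works out to roughly 15.5% growth every year for seven years.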

3. The Solar Advantage in Space

Solar panels in orbit can receive up to eight times more energy than their terrestrial counterparts, and sun-synchronous orbits allow near-constant generation. Google is pursuing the same idea in parallel through Project Suncatcher, a research effort that envisions constellations of solar-powered satellites carrying Tensor Processing Units (TPUs) and interconnected by free-space optical links. Travis Beals of Google’s Paradigms of Intelligence unit said that space-based data centers could eventually reach parity with terrestrial ones on a per-kilowatt-per-year operating-cost basis.
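Where does a figure like "up to eight times" come from? The back-of-the-envelope sketch below compares annual energy per square metre of panel in orbit and on the ground. Only the solar constant (about 1361 W/m² above the atmosphere) and the 1000 W/m² standard-test-condition peak are physical reference values; the 99% illumination fraction for a dawn-dusk sun-synchronous orbit and the 17% ground capacity factor are assumptions chosen for illustration, and real sites vary widely.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # mean solar irradiance above the atmosphere at 1 AU

def annual_insolation_kwh_m2(mean_irradiance_w_m2: float) -> float:
    """Energy per square metre of panel over a year for a given time-averaged irradiance."""
    hours_per_year = 365.25 * 24
    return mean_irradiance_w_m2 * hours_per_year / 1000.0

# Assumption: a dawn-dusk sun-synchronous orbit keeps panels lit ~99% of the time.
orbit_lit_fraction = 0.99
# Assumption: a ground panel sees ~1000 W/m^2 at peak and averages a ~17% capacity
# factor once night, sun angle, weather and the atmosphere are accounted for.
ground_peak_w_m2 = 1000.0
ground_capacity_factor = 0.17

orbit_kwh = annual_insolation_kwh_m2(SOLAR_CONSTANT_W_M2 * orbit_lit_fraction)
ground_kwh = annual_insolation_kwh_m2(ground_peak_w_m2 * ground_capacity_factor)
ratio = orbit_kwh / ground_kwh

print(f"Orbit:  {orbit_kwh:,.0f} kWh/m^2/yr")
print(f"Ground: {ground_kwh:,.0f} kWh/m^2/yr")
print(f"Ratio:  {ratio:.1f}x")
```

Panel efficiency cancels out because it multiplies both sides equally; what drives the ratio is the absence of night, weather and atmospheric losses in a well-chosen orbit.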

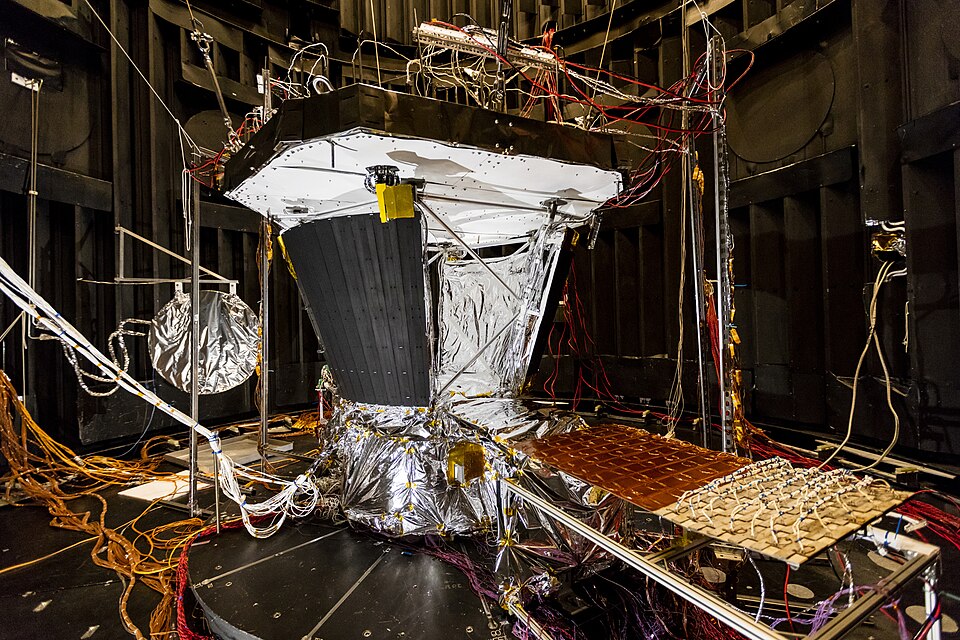

4. Engineering Around the Vacuum

Operating servers in space introduces unique challenges. Without air for convection, heat can only be shed by radiation, so radiative cooling systems must be built directly into satellite structures. Sensitive components, such as the high-bandwidth memory in AI accelerators, need radiation shielding; Google’s radiation tests suggest such components could operate for several years in orbit. Reaching gigawatt scale may require mechanical designs that tightly integrate solar collection, compute modules, and thermal control.
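Radiative cooling is governed by the Stefan-Boltzmann law: a surface at temperature T with emissivity ε radiates εσT⁴ watts per square metre. The sketch below uses that law to estimate how much radiator area a given heat load needs. It is a simplified illustration, not a spacecraft thermal model: the 1 MW load, 300 K and 350 K radiator temperatures, emissivity of 0.9 and two-sided panels are all assumed values, and absorbed sunlight and Earth's infrared glow are ignored.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4

def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9,
                     sides: int = 2, sink_temp_k: float = 0.0) -> float:
    """Panel area needed to reject `heat_w` purely by thermal radiation.

    Net flux per radiating side is emissivity * sigma * (T^4 - T_sink^4).
    Simplifying assumption: absorbed sunlight and Earth infrared are ignored.
    """
    flux_per_side = emissivity * STEFAN_BOLTZMANN * (temp_k**4 - sink_temp_k**4)
    if flux_per_side <= 0:
        raise ValueError("radiator must be hotter than its sink")
    return heat_w / (flux_per_side * sides)

# Hypothetical example: reject 1 MW of accelerator heat.
for temp in (300.0, 350.0):
    area = radiator_area_m2(1e6, temp)
    print(f"{temp:.0f} K radiator: {area:,.0f} m^2 of two-sided panel")
```

The T⁴ dependence is the key design lever: running the radiator hotter shrinks it sharply, which is one reason thermal control and compute hardware have to be designed together.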

5. High-Bandwidth Inter-Satellite Networking

Large-scale AI workloads require interconnect bandwidth of tens of terabits per second. Google’s research indicates that multi-channel dense wavelength-division multiplexing (DWDM) transceivers combined with spatial multiplexing can deliver this, provided the satellites fly in formations just hundreds of meters apart. Bench-scale demonstrations have already reached 1.6 Tbps of total throughput using a single transceiver pair. Holding such tight formations requires precise station-keeping, which can be modeled with the Hill-Clohessy-Wiltshire equations and refined with differentiable orbital dynamics simulations.
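The Hill-Clohessy-Wiltshire (HCW) equations describe the motion of one satellite relative to another on a circular reference orbit, linearized for small separations. The sketch below uses their standard closed-form solution to show why station-keeping matters: a follower slightly higher than its leader drifts hundreds of metres along-track every orbit unless its initial velocity is chosen to cancel the drift. The ~650 km altitude, the 10 m / 200 m offsets and the function names are assumptions for illustration; this ignores drag, Earth's oblateness and other perturbations that a real (for example, differentiable) simulation would include.

```python
import math

MU_EARTH = 3.986004418e14  # m^3/s^2, Earth's gravitational parameter

def mean_motion(altitude_m: float, earth_radius_m: float = 6_371_000.0) -> float:
    """Mean motion n (rad/s) of a circular reference orbit at the given altitude."""
    a = earth_radius_m + altitude_m
    return math.sqrt(MU_EARTH / a**3)

def hcw_position(t, n, x0, y0, z0, vx0, vy0, vz0):
    """Closed-form HCW solution for relative position after time t (seconds).

    x: radial (away from Earth), y: along-track, z: cross-track, in metres,
    measured from a leader on a circular orbit with mean motion n.
    """
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0 - (2 / n) * (1 - c) * vx0
         + (4 * s - 3 * n * t) / n * vy0)
    z = c * z0 + (s / n) * vz0
    return x, y, z

n = mean_motion(650_000.0)  # assumed ~650 km reference orbit
period = 2 * math.pi / n

# Follower 10 m above and 200 m behind the leader, starting with zero relative
# velocity: the higher (slower) orbit makes it fall further behind each period.
_, y_drift, _ = hcw_position(period, n, 10.0, -200.0, 0.0, 0.0, 0.0, 0.0)

# Choosing vy0 = -2 * n * x0 cancels the secular drift term, giving a closed
# relative orbit that returns to its starting point after one period.
x_closed, y_closed, _ = hcw_position(period, n, 10.0, -200.0, 0.0, 0.0, -2 * n * 10.0, 0.0)

print(f"Orbital period: {period / 60:.1f} min")
print(f"Along-track drift per orbit, uncorrected: {y_drift + 200.0:.1f} m")
print(f"Closed-orbit position after one period: ({x_closed:.3f}, {y_closed:.3f}) m")
```

The uncorrected drift per orbit equals -12π times the radial offset, so even a 10 m height error moves a satellite roughly 377 m along-track each revolution, comparable to the formation spacing itself.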

6. Economics of Launch and Deployment

Launch currently costs around US$1,500 per kilogram on a SpaceX Falcon Heavy. Google estimates this could fall to about US$200/kg by 2035, the point at which orbital data centers could become cost-competitive with Earth-based ones. Reusable rockets from both Blue Origin and SpaceX are critical here: they lower per-launch costs and make more frequent hardware refreshes possible. As AI accelerators evolve, swapping modules in orbit could become a decisive advantage.
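To put the projected price drop in perspective, the sketch below computes the constant yearly decline needed to go from US$1,500/kg to US$200/kg and what that means for launching a module. Treating "current" as 2025 and using a 1,000 kg compute module are assumptions made purely for illustration; actual price paths are unlikely to be this smooth.

```python
def annual_decline_rate(start_price: float, end_price: float, years: float) -> float:
    """Constant yearly fractional price drop that takes start_price to end_price."""
    return 1.0 - (end_price / start_price) ** (1.0 / years)

START_PRICE = 1500.0  # US$/kg, Falcon Heavy figure quoted above
TARGET_PRICE = 200.0  # US$/kg, Google's 2035 estimate quoted above
START_YEAR = 2025     # assumption: "current" taken to mean 2025
TARGET_YEAR = 2035

rate = annual_decline_rate(START_PRICE, TARGET_PRICE, TARGET_YEAR - START_YEAR)

# Hypothetical 1,000 kg compute module, to show the cost per launch at each price.
MODULE_MASS_KG = 1000.0
for year in range(START_YEAR, TARGET_YEAR + 1, 5):
    price = START_PRICE * (1.0 - rate) ** (year - START_YEAR)
    print(f"{year}: ${price:,.0f}/kg -> ${price * MODULE_MASS_KG:,.0f} per module")
print(f"Implied decline: {rate:.1%} per year")
```

On these assumptions, prices would need to fall by roughly 18% every year for a decade, which is why sustained gains from reusable rockets matter so much to the economics.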

7. Environmental and Grid Impact

The International Energy Agency warns that data centers could account for as much as 12% of global electricity demand growth by 2030, with AI as the main driver. Data centers already consume 26% of local electricity supplies in places like Virginia and a whopping 79% in Dublin. According to Google, space-based compute could lower terrestrial carbon emissions by a factor of ten while freeing up grid capacity for other electrification priorities. However, nearly 60% of today’s data centers are still powered by fossil fuels, and orbital facilities will cut emissions only if their launch and operational footprints remain low.

8. Strategic and Regulatory Dimensions

Beyond pure engineering, orbital AI data centers raise questions about spectrum allocation, orbital debris management, and data sovereignty. Denser satellite constellations increase the risk of collisions, which calls for sophisticated autonomous navigation. Individual nations may also come to treat space-based AI computing as strategic infrastructure and accelerate its timeline through public-private partnerships. Gigawatt-scale orbital clusters could open up new possibilities not just for AI research but also for military, environmental, and industrial uses.

Ultimately, the Bezos-Musk contest for orbital AI infrastructure is about more than vanity; it marks a convergence of space commercialization, the scaling limits of AI, and the imperatives of energy sustainability. Prototypes such as Google’s planned 2027 TPUs-in-orbit mission are poised to validate the core technologies, after which the race will enter a decisive phase where physics, economics, and ambition intersect hundreds of kilometers above Earth.