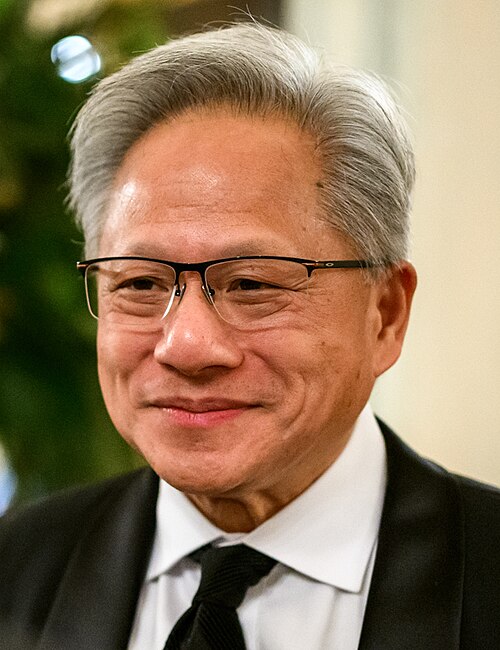

How long can a nation lead in AI if it cannot build the infrastructure fast enough to run it? Nvidia CEO Jensen Huang warns bluntly that the United States risks losing its edge to China, not because of chip design, in which the US remains “generations ahead,” but because of longer construction cycles and limited energy capacity.

1. The Velocity Deficit

In Huang’s comparison, the contrast is striking: “If you want to build a data center here in the United States, from breaking ground to standing up an AI supercomputer is probably about three years. China can build a hospital in a weekend.” He made the remark to John Hamre of the Center for Strategic and International Studies. That ability to mobilize resources at speed is not limited to healthcare; it extends to AI-scale infrastructure, where rapid deployment can protect first-mover advantage in competitive markets. In the US, multi-year lead times risk falling out of step with the pace of AI model evolution, forcing executives to plan around infrastructure timelines that lag technological change.

2. Energy Capacity: A Strategic Constraint

Huang also raised the issue of national energy availability. “China has twice as much energy as we have as a nation, and our economy is larger than theirs,” he said. China’s capacity continues to climb while US capacity remains largely flat. This disparity matters because AI data centers are measured in megawatts, not terabytes. Several facilities currently under construction have city-scale power demands of more than 1 GW. Without predictable, multi-gigawatt commitments, even the most advanced chips cannot be fully utilized.

3. China’s Position of Abundance

David Fishman, an expert on China’s energy sector, described a system built for over-capacity. Reserve margins of 80-100% mean that the country maintains roughly 1.8 to 2 times the capacity it needs at peak. AI data centers are seen as a way to “soak up oversupply,” not as a threat to grid stability. By comparison, US grids often operate at 15% margins and issue red-flag warnings when demand peaks. China’s coordinated technocratic energy planning builds generation and transmission well ahead of demand, while US projects face fragmented market rules, years-long permitting, and investor expectations of three-to-five-year returns.
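
To make the margin figures concrete, here is a minimal sketch that converts a reserve margin into installed capacity as a multiple of peak demand. It assumes the conventional definition, reserve margin = (capacity - peak demand) / peak demand; the source does not state which definition Fishman used, and the function name is illustrative.

```python
def capacity_multiple(reserve_margin_pct: float) -> float:
    """Installed capacity as a multiple of peak demand.

    Assumes reserve margin = (capacity - peak) / peak, so
    capacity = peak * (1 + margin).
    """
    return 1.0 + reserve_margin_pct / 100.0


# Margins quoted in the article: 15% (US), 80-100% (China)
for pct in (15, 80, 100):
    print(f"{pct}% reserve margin -> {capacity_multiple(pct):.2f}x peak demand")
```

Under that definition, a 15% margin leaves capacity at 1.15 times peak demand, while an 80-100% margin puts it at 1.8 to 2 times peak.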

4. The Power Crunch in US Hyperscale Build-Out

Microsoft CEO Satya Nadella summed up the bottleneck: “The biggest issue we are now having is not a compute glut, but it’s power.” Interconnection queues can be as long as eight years in PJM territory, home to “data center alley” in Virginia. Shortages of large transformers have tripled lead times since 2020, while delivery times for natural gas turbines have doubled to 4.5 years. As a result, hyperscalers are turning to behind-the-meter generation, such as the 361 MW of on-site gas turbines at OpenAI’s Stargate facility in Texas, to meet deadlines.

5. Engineering for Scale and Density

Next-generation AI supercomputers are driving unprecedented requirements for rack density and cooling performance. Facilities are adopting direct-to-chip liquid cooling or immersion systems to manage the thermal load generated by hundreds of thousands of GPUs. Power delivery systems need to support rack loads that can exceed 100 kW, driving innovation in busway design and redundant electrical feeds. Such engineering advances are likely to become the norm for sustaining compute density without sacrificing uptime.

6. Grid Modernization and Renewable Integration

Meeting AI-scale loads will require both traditional and renewable generation. The Department of Energy estimates a need for 75-100 GW of new capacity by 2030, with natural gas meeting 60% of near-term growth. At the same time, renewables are forecast to make up 45-50% of the mix by 2030, up from one-third today. Grid modernization faces obstacles: transmission infrastructure must expand to keep up, grid-scale batteries must be deployed, and distributed generation must be integrated, all at a time when supply chains are constrained and regulatory bodies are slow to act. According to Grid Strategies, delays could force costly curtailment or onsite generation.

7. Semiconductor Leadership Under Pressure

While the US leads in AI chip design, with Nvidia “generations ahead” in GPU architecture according to Huang, caution is required: “Anybody who thinks China can’t manufacture is missing a big idea.” Another possible chokepoint is semiconductor fabrication capacity. McKinsey estimates that technology developers and designers will need $3.1 trillion in capital expenditure by 2030 to meet AI demand. Until supply chains are diversified or domestic fabs are built, bottlenecks will impede the chip advantage.

8. Capital Deployment at Historic Scale

DataBank CEO Raul Martynek expects 5 to 7 GW of new US data center capacity in the coming year alone. At $10 to $15 million per MW, that implies $50 to $105 billion in annual spend. McKinsey’s global forecast is more staggering still: $5.2 trillion in AI-related data center investment by 2030, with builders, energizers, and technology developers as the primary capital drivers. Even so, CEOs remain cautious given the uncertainty over long-term demand and return on investment. Staged investment strategies will therefore be necessary.
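
The annual spending range quoted above follows from multiplying capacity by unit cost. A minimal sketch of that arithmetic, assuming the low end pairs 5 GW with $10 million per MW and the high end pairs 7 GW with $15 million per MW (the function name is illustrative, not from the source):

```python
def annual_capex_billion_usd(capacity_gw: float, cost_musd_per_mw: float) -> float:
    """Build-out cost in billions of USD for a given capacity and unit cost."""
    capacity_mw = capacity_gw * 1000            # 1 GW = 1,000 MW
    total_musd = capacity_mw * cost_musd_per_mw  # total in millions of USD
    return total_musd / 1000                     # millions -> billions


low = annual_capex_billion_usd(5, 10)   # 5 GW at $10M per MW
high = annual_capex_billion_usd(7, 15)  # 7 GW at $15M per MW
print(f"Estimated annual spend: ${low:.0f}B to ${high:.0f}B")
```

The two endpoints reproduce the $50 billion and $105 billion figures; intermediate combinations, such as 7 GW at $10 million per MW, fall inside that range.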

9. Deployment Lag and Competitive Risk

The trade-offs are sharply defined: technological leadership in chips does not translate into AI leadership if infrastructure deployment lags. With its rapid build cycles and abundant energy, China can scale AI workloads with little delay. In a race where compute power is the currency, delays in US construction and grid upgrades could erode strategic advantage, leaving executives to weigh the risks of underbuilding against the costs of stranded assets.