OpenAI’s Sam Altman is apparently not content with shaping AI technology on Earth: he now wants to extend his operations into space. Altman has explored buying or partnering with rocket companies to build AI data centers in orbit, a move that could change how both AI infrastructure and space economics work. Unlike Elon Musk’s ambitions for Mars missions, these plans center on orbital computers that run entirely on solar power. Such stations would operate free of Earth’s power constraints and are pitched as sparing the environment on the ground.

1. The Orbital Compute Vision

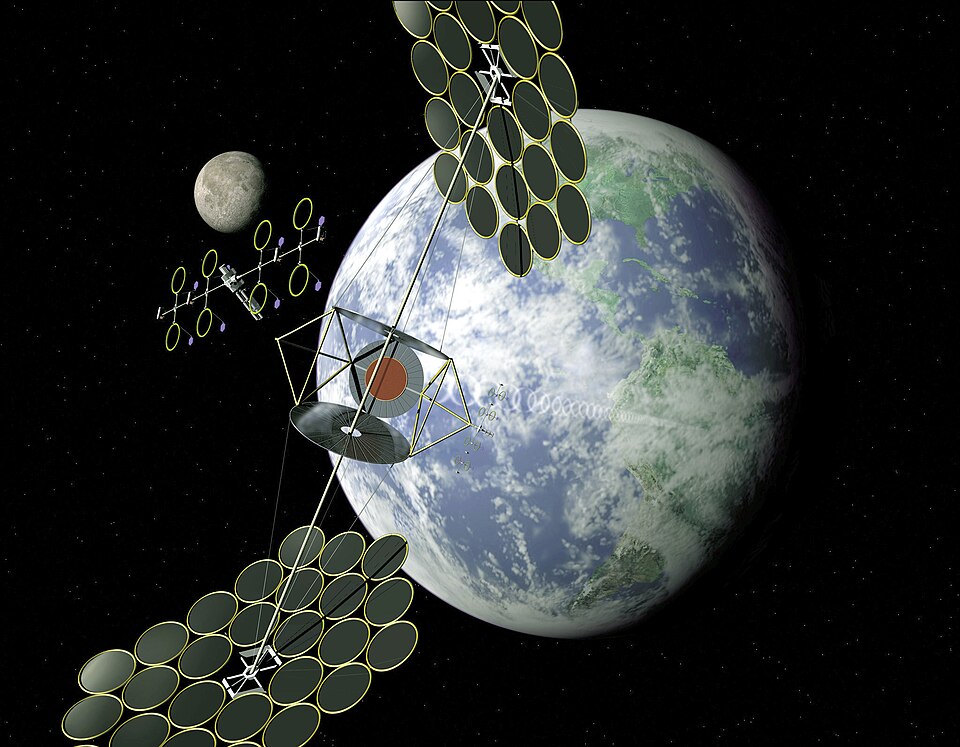

Altman’s idea depends on advantages that exist only outside Earth’s atmosphere. Solar panels in space can generate up to eight times more power than the same panels on the ground, because in certain orbits they receive sunlight almost continuously. With no atmosphere, weather, or day-night cycle to interrupt them, these AI centers could run non-stop without large battery systems. Heat removal, one of the biggest engineering problems for data centers on Earth, would be handled by radiating waste heat into the cold of space, which would reduce both cooling costs and environmental harm.
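The "eight times" figure can be sanity-checked with rough arithmetic. The sketch below is only an illustration: the solar constant (about 1361 W/m² above the atmosphere) and the 1000 W/m² peak used to rate ground panels are standard values, while the 99% sunlit fraction in orbit and the 17% ground capacity factor are assumed inputs chosen for this example, not numbers from the reporting.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # sunlight intensity above the atmosphere
GROUND_PEAK_W_M2 = 1000.0     # standard peak irradiance used to rate ground panels


def orbit_to_ground_ratio(orbit_sunlit_fraction: float,
                          ground_capacity_factor: float) -> float:
    """Ratio of average sunlight per square meter, orbit vs ground.

    Panel efficiency cancels out because the same panel is assumed in both places.
    """
    if not 0.0 < ground_capacity_factor <= 1.0:
        raise ValueError("ground_capacity_factor must be in (0, 1]")
    if not 0.0 <= orbit_sunlit_fraction <= 1.0:
        raise ValueError("orbit_sunlit_fraction must be in [0, 1]")
    orbit_avg = SOLAR_CONSTANT_W_M2 * orbit_sunlit_fraction
    ground_avg = GROUND_PEAK_W_M2 * ground_capacity_factor
    return orbit_avg / ground_avg


# Assumed inputs (illustrative only): near-continuous sunlight in orbit,
# 17% capacity factor for a ground installation.
ratio = orbit_to_ground_ratio(orbit_sunlit_fraction=0.99,
                              ground_capacity_factor=0.17)
print(f"Orbit receives roughly {ratio:.1f}x the ground average")
```

The result is very sensitive to the ground capacity factor, so a sunnier site narrows the gap considerably.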

2. The Stoke Space Negotiations

According to reports, Altman opened talks with Stoke Space, a startup developing the fully reusable Nova rocket. Stoke was founded by engineers who left Blue Origin, and full reusability is meant to make repeated launches cheaper, which matters a great deal for building computing systems in orbit. Altman reportedly proposed investing billions of dollars for a controlling stake, which would give OpenAI full control over its own launch operations. No deal was finalized, but the talks show that OpenAI is seriously considering building everything from the rockets to the orbital computers themselves.

3. Financial Pressure and Strategic Scale

OpenAI is planning a huge expansion, with nearly $600 billion earmarked for new data centers, far more than its expected $13 billion in annual revenue. The Stargate project requires an $18 billion investment in facilities on Earth, a major commitment to ground-based infrastructure. Meanwhile, AI computing uses more electricity every year, an estimated 76 TWh in 2024, so moving data centers into orbit could help avoid power shortages and volatile energy costs in the long run. The catch is that the upfront capital for space infrastructure is very large, and returns could take decades to arrive.

4. Google’s Rival Play: Project Suncatcher

Google’s Project Suncatcher is currently the most advanced publicly known effort in orbital AI computing. The plan describes a cluster of 81 satellites carrying specialized AI chips and exchanging data exclusively over high-speed optical links. Flying the satellites close together keeps those links strong while saving power. The tradeoff is that tight formations in already crowded orbits raise the risk of collisions, so precise station-keeping and automated debris avoidance are required.

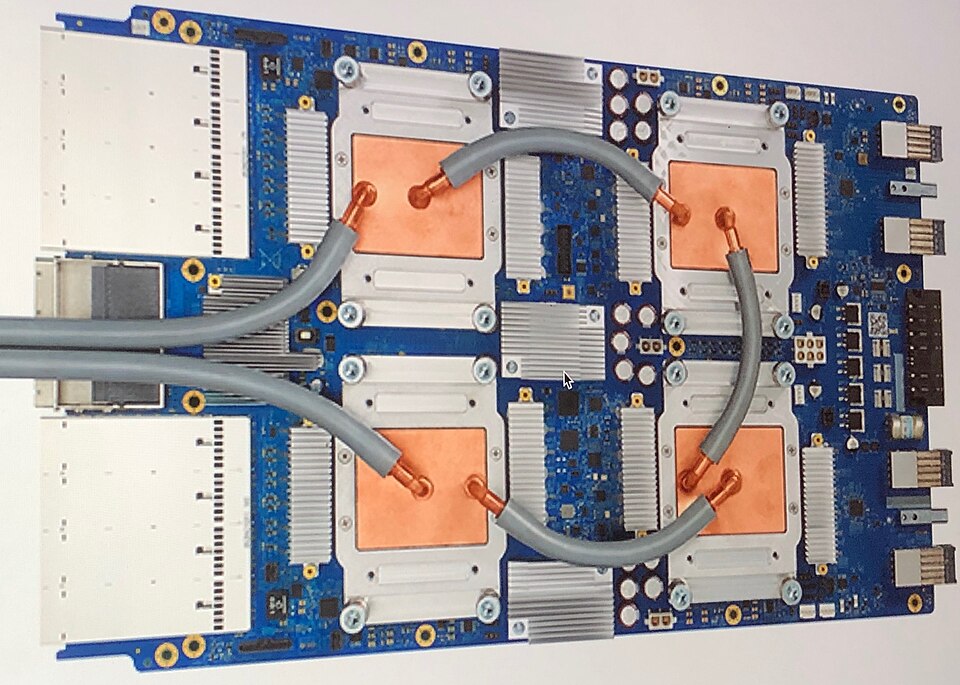

5. Engineering Challenges: Radiation, Thermal Management, and Bandwidth

Radiation hardening is essential for AI hardware operating in orbit. Google’s tests on its Trillium TPU suggest that even the sensitive HBM memory can survive for years in orbit. Managing heat requires an integrated design in which solar panels, compute hardware, and heat-rejection systems work together: the cold of space helps, but in a vacuum waste heat can leave only by radiation. Inter-satellite bandwidth must match the standards of ground data centers, and Google’s small-scale test reached 1.6 Tbps using special transceivers. Scaling this up in orbit requires extremely precise satellite positioning and formation flying.
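To see why heat rejection still shapes the design, consider that a spacecraft has no air or water to carry heat away, so a radiator must emit it as infrared light. The following is a minimal sketch using the Stefan-Boltzmann law. The function name, the 0.9 emissivity, the 300 K radiator temperature, and the 1 MW heat load are assumptions picked for illustration, and the model ignores sunlight and Earth’s infrared falling on the radiator, which would increase the required area.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float,
                     radiator_temp_k: float,
                     emissivity: float = 0.9,
                     sink_temp_k: float = 3.0,
                     sides: int = 1) -> float:
    """Radiator area needed to reject heat_w by thermal radiation alone.

    Idealized: ignores absorbed sunlight and Earth's infrared, and assumes
    a uniform radiator temperature.
    """
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be warmer than the background")
    if sides not in (1, 2):
        raise ValueError("sides must be 1 or 2")
    # Net radiated power per square meter of emitting surface
    flux_w_m2 = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k ** 4 - sink_temp_k ** 4
    )
    return heat_w / (flux_w_m2 * sides)


# Assumed example: 1 MW of waste heat, radiator held at 300 K
area = radiator_area_m2(heat_w=1_000_000.0, radiator_temp_k=300.0)
print(f"Single-sided radiator area: {area:,.0f} m^2")
```

Because emitted power scales with the fourth power of temperature, running the radiator hotter shrinks it quickly, which is one reason the thermal and compute designs cannot be separated.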

6. Reusable Rocket Economics

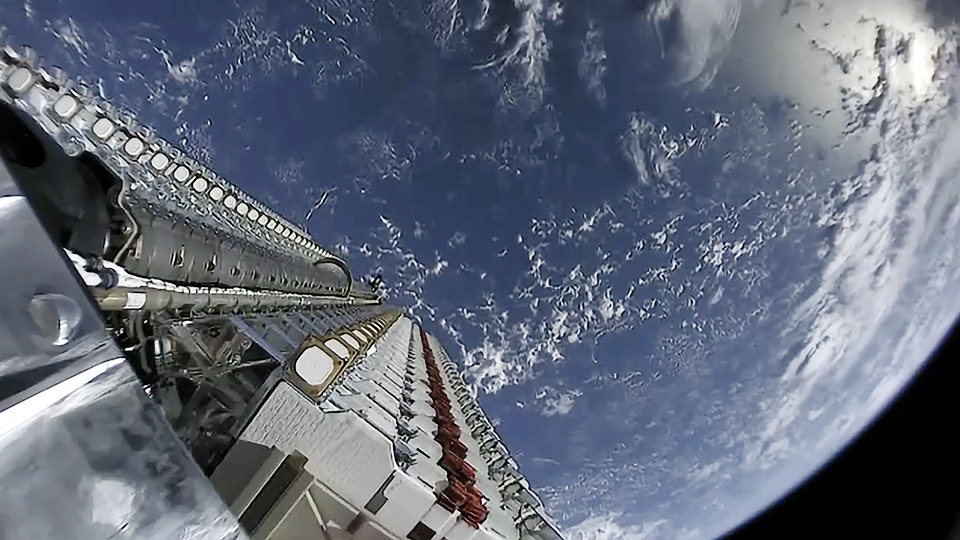

Altman’s plan depends on launch vehicles that can be flown again and again; reusable rockets are the most important part of the vision. Reusable vehicles such as Stoke’s Nova and SpaceX’s Falcon 9 and Starship aim to cut launch costs per kilogram well below those of expendable rockets. Many launches will be needed, not only to deploy the first satellite clusters but also to replace aging satellites over time. Rapid reuse with only minor refurbishment could make orbital AI systems profitable by the 2030s, which matches what Google thinks is possible.
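The link between price per kilogram and replacement launches can be made concrete with a small calculation. This is a rough sketch, not a cost model: the satellite mass, satellite lifetime, time horizon, and both prices per kilogram are hypothetical placeholders, and only the fleet size of 81 is borrowed from the Suncatcher plan described earlier.

```python
import math


def fleet_launch_cost_usd(n_sats: int,
                          sat_mass_kg: float,
                          price_per_kg_usd: float,
                          sat_life_years: float,
                          horizon_years: float) -> float:
    """Launch spend to deploy a fleet and keep replacing it as satellites age out."""
    if sat_life_years <= 0 or horizon_years <= 0:
        raise ValueError("lifetimes and horizon must be positive")
    # Fleets are launched at year 0 and again each time the previous one wears out
    generations = math.ceil(horizon_years / sat_life_years)
    total_mass_kg = n_sats * sat_mass_kg * generations
    return total_mass_kg * price_per_kg_usd


# Hypothetical inputs: 81 satellites of 1,000 kg each, 5-year life, 15-year horizon
common = dict(n_sats=81, sat_mass_kg=1000.0, sat_life_years=5.0, horizon_years=15.0)
expendable = fleet_launch_cost_usd(price_per_kg_usd=3000.0, **common)  # hypothetical price
reusable = fleet_launch_cost_usd(price_per_kg_usd=300.0, **common)     # hypothetical price
print(f"Expendable: ${expendable:,.0f}  Reusable: ${reusable:,.0f}")
```

Since launch cost scales linearly with price per kilogram in this model, any saving per launch is multiplied by every replacement generation.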

7. The Space Debris Threat

Any large satellite constellation in low Earth orbit faces the danger of Kessler syndrome, a chain reaction of collisions that could make those orbits unusable. The U.S. Space Force tracks more than 40,000 large debris objects, but millions of smaller, still dangerous fragments are invisible to sensors, which adds risk to every mission. In 2025, SpaceX’s Starlink performed more than 144,000 collision-avoidance maneuvers. AI satellite clusters will need automated avoidance, especially when the satellites fly less than 200 meters apart.
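One building block of automated avoidance is a closest-approach check between two objects. The sketch below assumes straight-line relative motion over a short time window, which is a simplification; operational systems propagate real orbits and account for position uncertainty. The function names and the 50-meter keep-out distance are hypothetical.

```python
import math
from typing import Sequence, Tuple


def closest_approach(rel_pos_m: Sequence[float],
                     rel_vel_m_s: Sequence[float]) -> Tuple[float, float]:
    """Return (time in s, miss distance in m) for straight-line relative motion."""
    speed_sq = sum(v * v for v in rel_vel_m_s)
    if speed_sq == 0.0:
        t = 0.0  # no relative motion: the separation never changes
    else:
        # Time at which the separation is smallest, never in the past
        dot = sum(r * v for r, v in zip(rel_pos_m, rel_vel_m_s))
        t = max(0.0, -dot / speed_sq)
    miss = math.sqrt(sum((r + v * t) ** 2 for r, v in zip(rel_pos_m, rel_vel_m_s)))
    return t, miss


def needs_maneuver(rel_pos_m: Sequence[float],
                   rel_vel_m_s: Sequence[float],
                   keep_out_m: float = 50.0) -> bool:
    """Flag a conjunction when the predicted miss distance is inside the keep-out radius."""
    _, miss = closest_approach(rel_pos_m, rel_vel_m_s)
    return miss < keep_out_m


# Neighbor 200 m ahead and 30 m to the side, closing at 2 m/s
t, miss = closest_approach((200.0, 30.0, 0.0), (-2.0, 0.0, 0.0))
print(f"Closest approach in {t:.0f} s at {miss:.0f} m")
print("Maneuver needed:", needs_maneuver((200.0, 30.0, 0.0), (-2.0, 0.0, 0.0)))
```

With satellites spaced a couple of hundred meters apart, the warning time in a check like this is measured in seconds to minutes, which is why the response has to be automatic.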

8. Sustainability and the Energy Equation

Space data centers can relieve pressure on Earth’s power grid, but the AI workloads themselves will still consume the same amount of energy. Training a single large model of the kind behind ChatGPT produces an estimated 552 tons of CO₂, and running the model afterward can account for up to 90% of its lifetime energy use. Lenovo’s sustainability framework focuses on physical efficiency with a PUE (power usage effectiveness) near 1.1, workload optimization, and circular economy principles to reduce environmental impact. Even with the hardware in orbit, the pollution from manufacturing satellites, launching them, and deorbiting them later still has to be managed.
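PUE is the ratio of a facility’s total energy use to the energy consumed by its IT equipment, so a value near 1.1 means about 10% extra on top of the computing load. A minimal sketch of that arithmetic follows; the function names and the 1,000 kWh IT load are illustrative assumptions.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility energy spent on cooling, power conversion, etc."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return (pue - 1.0) / pue


# Assumed example: 1,000 kWh of IT load at the PUE of 1.1 cited above
total = facility_energy_kwh(it_energy_kwh=1000.0, pue=1.1)
print(f"Total facility energy: {total:.0f} kWh")
print(f"Overhead share of total: {overhead_share(1.1):.1%}")
```

At a PUE this low, nearly all of the remaining energy is the computing itself, which is why workload optimization matters as much as facility efficiency.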

9. Competitive and Policy Landscape

Beyond the technical problems, orbital AI faces regulatory and geopolitical barriers. FCC rules require satellites to be deorbited within five years after their mission ends. Policymakers are also considering fees for the use of orbits to fund debris cleanup. On the ground, AI data centers are pushing electricity bills up: households in Virginia are expected to pay $37.50 more each month because of the power these centers consume.

Space systems can sidestep some Earth-based limits, but they will face new rules and oversight of their own. Altman and Musk are now competing in an arena where reusable rockets meet AI computing, and where launch costs, orbital physics, and space politics all converge. The next decade will show whether AI can succeed in space or whether these plans remain risky bets.