Two cavernous buildings in Santa Clara, California, together spanning nearly one million square feet, stand silent. Their server racks have yet to be installed, their cooling systems sit idle, and their lights are dim. The reason is not a lack of demand for AI computing power but something far more basic: no approved electricity supply.

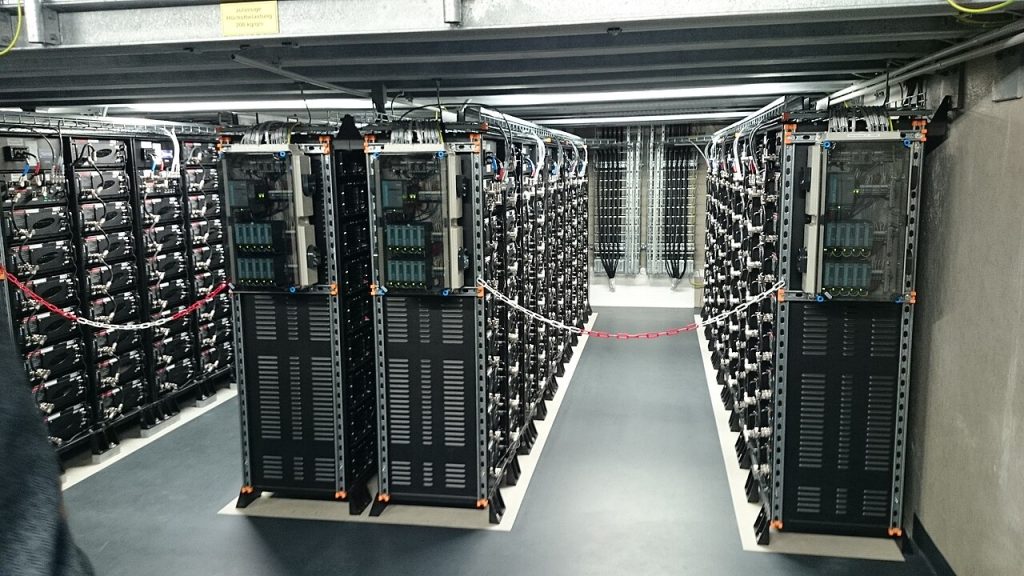

1. Scale and Power Requirements

The 430,000-square-foot facility from Digital Realty and the 551,000-square-foot site from Stack Infrastructure were designed to deliver up to 48 megawatts each at full capacity. That is enough to power nearly 100,000 homes for an hour. While large by Silicon Valley standards, these sites are modest in size compared with hyperscale AI complexes in Texas or New Mexico, where single campuses can demand gigawatts. Yet even this “smaller” scale has proved too much for the local grid to accommodate.

2. The Utility Bottleneck

Santa Clara's municipally owned utility, Silicon Valley Power, is in the middle of a $450 million system upgrade that won’t be completed until 2028. Until then, it can’t deliver the capacity these facilities need. “The demand has never been higher, and it’s really a power-supply problem that we have,” said Bill Dougherty, executive vice president for data center solutions at CBRE Group Inc. This delay is emblematic of a national challenge: aging infrastructure, slow transmission build-outs, and regulatory hurdles are colliding with AI’s exponential growth in energy demand.

3. The Exponential Energy Appetite of AI

Projections from BloombergNEF and the Lawrence Berkeley National Laboratory put US AI-specific data center consumption at 53 to 76 terawatt-hours in 2024, swelling to as much as 326 terawatt-hours by 2028, enough to power 22% of US households. Data centers globally are expected to use 1,050 TWh of electricity by 2026, putting them among the five largest electricity consumers worldwide. AI workloads, including large language model training and inference, can use as much as eight times the energy of conventional computing tasks.

4. Technical Stress on the Grid

AI data centers have very high power densities and run their GPUs and TPUs around the clock. In addition, their cooling systems consume up to 30% of the total electricity used. Unlike residential demand, which peaks seasonally with heating and cooling, this load is flat. That flatness is a problem for grid operators, who must keep the grid stable under extreme weather conditions. Meanwhile, the “interconnection queue” for new loads and generators is backlogged, often with renewable projects, and will take five years or more to clear.

5. Increased Consumer Costs

The PJM Interconnection, a region that includes much of the Mid-Atlantic and Midwest, saw its capacity auction surge from $2.2 billion to $14.7 billion in one year, with 63 percent of that gain driven by demand from data centers. Those costs trickle down to households: Ohio residents can expect bill increases of as much as $16 a month, and residents of western Maryland as much as $18. Nationally, electricity prices have risen 30% since 2021, and studies predict an additional 8% average increase by 2030 due to data centers, with up to 25% in high-demand regions.
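The auction arithmetic can be checked with a short sketch. The dollar figures and the 63 percent share come from the paragraph above; the function name is illustrative, and the calculation assumes the share applies to the year-over-year increase rather than to the auction total.

```python
def data_center_share_of_increase(before_bn, after_bn, share):
    """Return (total increase, portion attributed to data centers), in $ billions.

    Assumes `share` is a fraction of the increase, not of the final total.
    """
    increase = after_bn - before_bn
    return increase, increase * share


# Figures quoted in the article: $2.2B -> $14.7B, 63% of the gain.
increase, attributed = data_center_share_of_increase(2.2, 14.7, 0.63)
print(f"Increase: ${increase:.1f}B; attributed to data centers: ${attributed:.1f}B")
```

Under that reading, the increase is about $12.5 billion, of which roughly $7.9 billion would be attributable to data center demand.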

6. Climate and Resource Impacts

U.S. data centers still get more than 40% of their electricity from natural gas, while renewables supply roughly 24% and nuclear 20%. Because so many still rely on fossil fuels, the electricity that data centers use produces on average 48% more CO₂ per kWh than the overall U.S. grid, according to researchers at Harvard. Cooling systems also use enormous volumes of water: hyperscale facilities might use as much as 33 billion gallons per year by 2028, stressing municipal supplies.

7. Industry Responses and Alternatives

Tech giants are actively pursuing nuclear power purchase agreements, advanced geothermal projects, and even fusion prototypes to meet the increasing demand. Microsoft has contracted to purchase output from a restarted Three Mile Island reactor; Google has ordered small modular reactors for delivery by 2030. Others are experimenting with “carbon-aware computing,” shifting workloads to times and places with cleaner energy availability, though this approach is limited by transmission constraints.
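The idea behind carbon-aware computing can be sketched in a few lines. This is a minimal illustration, not any company's actual scheduler: the `Slot` type, the `reachable` flag (standing in for transmission or capacity limits), the region names, and all intensity values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    region: str
    hour: int
    carbon_g_per_kwh: float  # forecast grid carbon intensity (made-up values)
    reachable: bool          # False when transmission or capacity limits rule the slot out


def pick_cleanest_slot(slots):
    """Return the reachable slot with the lowest carbon intensity, or None."""
    candidates = [s for s in slots if s.reachable]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.carbon_g_per_kwh)


forecast = [
    Slot("region-a", 2, 410.0, True),
    Slot("region-a", 13, 230.0, True),
    Slot("region-b", 13, 120.0, False),  # cleanest, but not deliverable
    Slot("region-b", 22, 300.0, True),
]

best = pick_cleanest_slot(forecast)
print(best)
```

In this toy forecast the cleanest slot is unreachable, so the job lands on the next-best option, which is the limitation the paragraph describes.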

8. Engineering for Sustainability

AI researchers are targeting more efficient models, domain-specific architectures, and hardware accelerators such as neuromorphic chips that consume less power. Innovation in facility design can slash operational loads by exploiting natural ventilation, optimized airflow layouts, and advanced cooling materials. Long-duration battery energy storage system (BESS) capacity is expected to rise to 20 GW by the end of the decade, enough to offset the intermittency of renewables for data centers.

9. Stranded Capital

With 74.3% of U.S. data center construction pre-leased, delays to energization represent not just lost operational time but also stranded capital. Digital Realty’s U.S. centers average $13.3 million per megawatt in build cost; at that rate, each idle 48 MW facility ties up more than $630 million before it generates any revenue. For investors, prolonged grid constraints could mean waiting years before returns materialize.
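The stranded-capital figure follows from a single multiplication, sketched below. The inputs are the article's own numbers; the function name is illustrative, and applying Digital Realty's average cost per megawatt to a specific site is an assumption, since actual build costs vary by facility.

```python
def stranded_capital_musd(capacity_mw, cost_per_mw_musd):
    """Capital tied up in an un-energized facility, in $ millions."""
    return capacity_mw * cost_per_mw_musd


# 48 MW at an average build cost of $13.3M per MW (figures from the article).
per_site = stranded_capital_musd(48, 13.3)
print(f"Capital tied up per idle site: ${per_site:.1f}M")
```

That works out to about $638 million per site, consistent with the "more than $630 million" cited above.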

The silent halls in Santa Clara are a lot more than empty buildings; they are a snapshot of what happens when AI’s meteoric rise collides with the realities of energy infrastructure. Until those wires catch up with the algorithms, the future of AI will be at least as much about megawatts as model parameters.